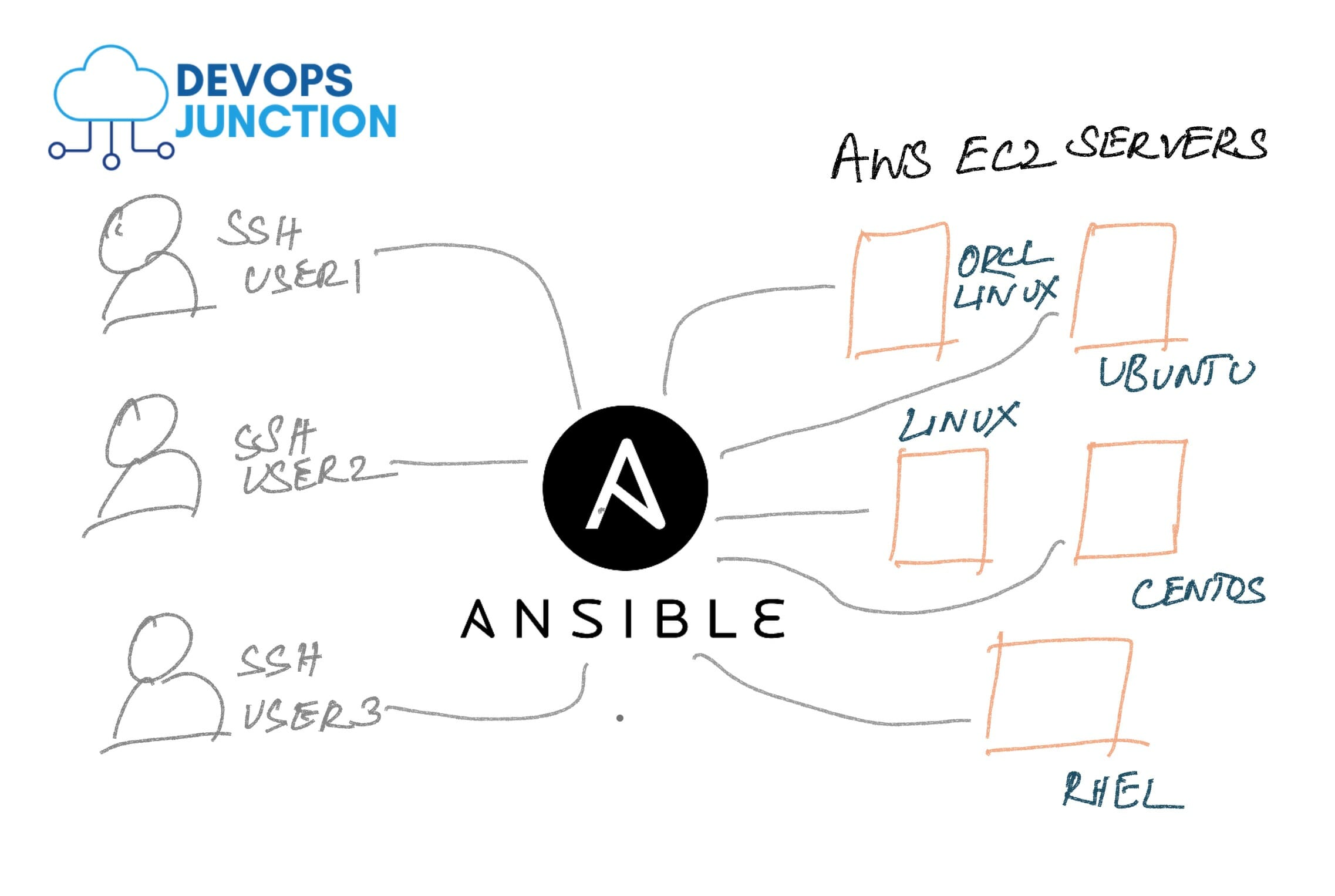

Whether it is on-premises or cloud-hosted, a typical non-containerized enterprise infrastructure has plenty of virtual machines, aka servers (Linux).

Let us suppose that you work for the DevOps team of a big organization where you manage 100+ EC2 instances. A new member has joined your team, and you need to give them access to all these machines.

You have two options for giving new users SSH access to the EC2 servers:

- Copy the user's public key to the default user (ubuntu, centos, ec2-user, admin) on every server. This is discussed in this article.

- Create a user ID for each new user and copy their SSH public key, so they can log in with their own user ID and SSH private key. Refer to our other article for this.

In this post, we discuss how to add a user's SSH public key to the default user ID of the remote EC2 servers. The remote servers can run the same distribution or different ones, so their default user IDs may be the same or different.

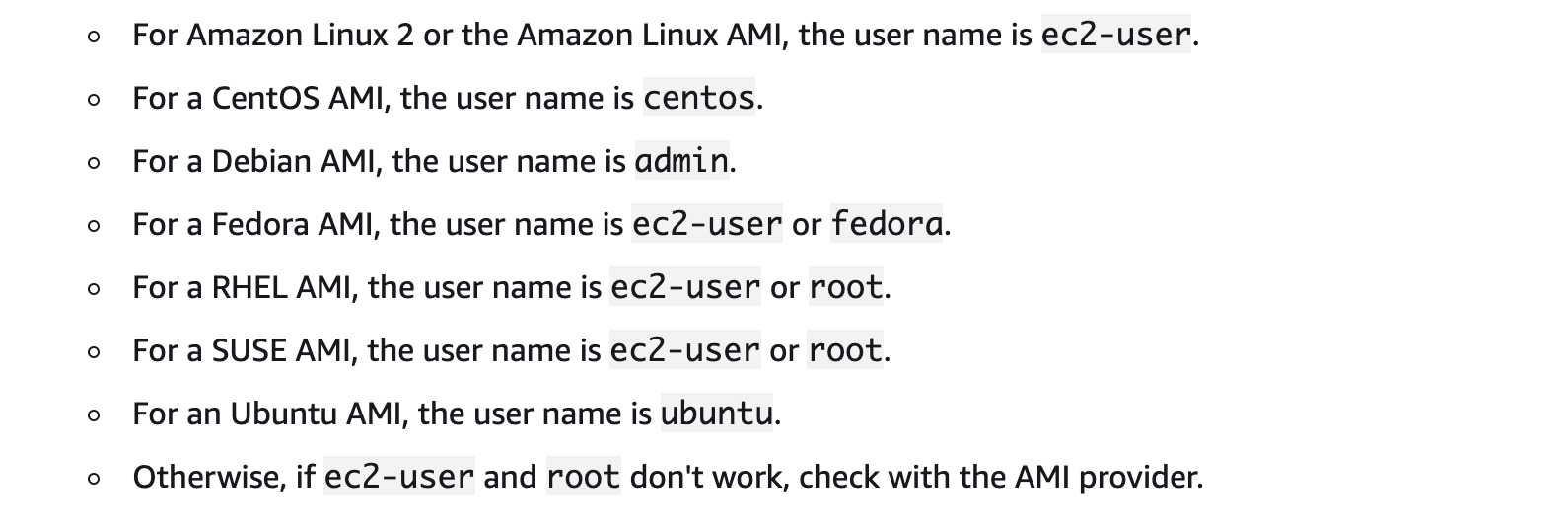

EC2 servers have different default user IDs depending on the AMI being used. Refer to the screenshot taken from the Amazon documentation.
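If you script around this, a small lookup from AMI family to default login user saves some guesswork. The following is a minimal sketch. The family keys and the `default_user` function are hypothetical. The mapping reflects common AWS defaults, including the user names mentioned in this article, so confirm it for your specific AMI.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the usual default SSH login for an AMI family.
# Assumption: mapping reflects common AWS defaults; confirm it for your AMI.
default_user() {
  case "$1" in
    amazon-linux|rhel) echo "ec2-user" ;;
    ubuntu)            echo "ubuntu" ;;
    centos)            echo "centos" ;;
    debian)            echo "admin" ;;
    *)
      echo "unknown AMI family: $1" >&2
      return 1
      ;;
  esac
}
```

You could then pass `--user "$(default_user ubuntu)"` to the playbook run instead of typing the user name by hand.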

The objective of this article is to add a new SSH key to multiple EC2 instances at the same time. How are we going to do it?

I am an Ansible fan because of its capability and simplicity. This post is about one thing: giving a new team member access to the existing EC2 instances.

We may refer to this in different ways, such as:

How to add an SSH key to an EC2 instance, add a new key pair to an EC2 instance, EC2 add key to instance, add a key pair to an existing EC2 instance, add an SSH key to EC2 with Ansible, etc.

What are we going to do?

Add a user's SSH key to running EC2 instances. We are going to use Ansible to add a new SSH key to multiple EC2 instances at the same time.

Basically, we copy the user's public key and add it to the authorized_keys file of the default remote user on the EC2 instances, such as ubuntu, centos, ec2-user etc.

This playbook can add an SSH key to multiple servers at the same time. The servers can run different distributions (RHEL, Ubuntu, CentOS) or the same one.
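Conceptually, adding a key for one user comes down to three things. Make sure `~/.ssh` exists, append the key to `~/.ssh/authorized_keys` only if it is not already there, and keep tight permissions on both. The shell function below is a simplified local sketch of that idea, for illustration only. `add_pubkey` is a hypothetical name, the playbook does not use it, and the real Ansible module does more (key options, comments, exclusive mode).

```bash
#!/usr/bin/env bash
# Hypothetical sketch: idempotently append a public key to
# <home>/.ssh/authorized_keys. Simplified: compares whole lines,
# which is not how the Ansible module matches keys.
add_pubkey() {
  local home_dir=$1 pubkey_file=$2
  local ssh_dir="$home_dir/.ssh"
  local auth="$ssh_dir/authorized_keys"
  local key
  key=$(head -n 1 "$pubkey_file") || return 1   # a .pub file holds one key line

  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"
  touch "$auth" && chmod 600 "$auth"

  # -q quiet, -x whole-line match, -F fixed string (no regex)
  if ! grep -qxF -- "$key" "$auth"; then
    printf '%s\n' "$key" >> "$auth"
  fi
}
```

For example, running `add_pubkey "$HOME" /tmp/rohit_new_employee.pub` twice as the login user on an instance still leaves only one copy of the key. This is the same idempotence you get from the module.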

What we are not going to do

It is also important to understand that we are not creating any new user on the remote server.

We are simply going to add the public keys to the authorized_keys file of a single user on the EC2 instance.

Simply put, this post assumes that multiple users share the same account (the default account). We are not creating any new user ID on the remote system.

If you would like to create a dedicated user account and copy the user's SSH key into their own user ID on the EC2 instance, refer to our other article.

How are we going to do it?

Using Ansible and its authorized_key module. This playbook also serves as an example of Ansible's authorized_key module.

What you might need

- The private key of the AWS key pair associated with the instances you are adding the key to

- An Ansible control machine (a machine with Ansible installed)

Steps to Add SSH Key to EC2 Instances

- Copy the playbook (or clone our Git repo).

- Add the IPs/hostnames of the desired instances to the Ansible inventory file, aka the hosts file.

- Run the playbook.

- SSH to verify.

Step 1: Create a playbook

This is the playbook we are going to use. You can copy it to your local machine or get it from the GitHub repo https://github.com/AKSarav/Add-SSH-Key-EC2-Ansible.git

Copy this content and save it as add-key.yml, or clone the Git repo mentioned above.

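```yaml
---
- name: "Playbook to Add Key to EC2 Instances"
  hosts: hosts_to_add_key
  vars:
    status: "present"           # "present" adds the key, "absent" removes it
    key: "~/.ssh/id_rsa.pub"    # public key file on the control machine
  tasks:
    - name: "Add or remove the public key in the remote user's authorized_keys"
      authorized_key:
        user: "{{ ansible_user }}"           # the remote login user
        state: "{{ status }}"
        key: "{{ lookup('file', key) }}"     # read the key file's contents
```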

Here we have defined a few variables:

status - whether to keep or remove the SSH key on the remote server (present to add, absent to delete).

key - the path to the public key file to copy. If it is not given at run time, your current user's default public key (~/.ssh/id_rsa.pub) is copied to the remote server.

ansible_user - the user name on the remote machine (EC2 instance). It is not set in the playbook. It is supplied at run time (via --user) or in the inventory file.
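A common slip is to pass the private key (or the AWS `.pem` file) as `key` instead of the `.pub` file. You can catch that with a quick pre-flight check on the control machine before running the playbook, like the hypothetical `is_public_key` below. The list of accepted key-type prefixes is an assumption and covers the common OpenSSH public key types.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: succeed only if the file's first line looks
# like an OpenSSH public key: "<type> <base64> [comment]".
is_public_key() {
  local first
  first=$(head -n 1 "$1" 2>/dev/null) || return 1
  case "$first" in
    ssh-rsa\ *|ssh-ed25519\ *|ecdsa-sha2-nistp*\ *) return 0 ;;
    *) return 1 ;;   # e.g. "-----BEGIN ... PRIVATE KEY-----" ends up here
  esac
}
```

For example: `is_public_key /tmp/rohit_new_employee.pub || echo "not a public key"`.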

Step 2: Add the list of EC2 public/private IPs to the Ansible inventory file

If you have cloned my Git repo, you will end up with a directory structure similar to the one shown below.

```
➜  ansible tree ec2-addkey
ec2-addkey
├── add-key.yml
└── ansible_hosts

0 directories, 2 files
```

As you can see, the main directory, ec2-addkey, contains two files.

One is the playbook, add-key.yml, and the other is the inventory file, ansible_hosts.

Here is the content of the inventory file

```ini
[hosts_to_add_key]
34.83.178.254
104.12.32.122

[hosts_to_add_key:vars]
ansible_ssh_common_args="-o StrictHostKeyChecking=no"
```

Here, I have added two (sample) public IPs of machines that need the new SSH key. You can replace the IPs with your actual ones. Do not change the host group name unless you also change it in the playbook.

If you have set up your Amazon infrastructure with a VPN and/or a Route 53 private hosted zone, you can use hostnames or private IP addresses instead. I am already writing an article about this, so sign up to the mailing list to be notified.

Now we have the list of servers under the Ansible host group named hosts_to_add_key. We have also set a group variable that tells SSH to skip host key verification (StrictHostKeyChecking=no).
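With 100+ instances, typing the inventory by hand gets tedious. Suppose you already have the addresses in a text file, one per line (for example, exported from the EC2 console). A sketch like the hypothetical `write_inventory` function below can then generate a file with the same layout as the `ansible_hosts` shown here. The input file name is an assumption.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write_inventory <ip_list_file> <inventory_file>
# Emits the host group name the playbook expects, plus the SSH option group var.
write_inventory() {
  local ip_list=$1 out=$2
  {
    echo "[hosts_to_add_key]"
    # copy addresses, skipping blank lines and comment lines
    grep -Ev '^[[:space:]]*(#|$)' "$ip_list"
    echo
    echo "[hosts_to_add_key:vars]"
    echo 'ansible_ssh_common_args="-o StrictHostKeyChecking=no"'
  } > "$out"
}
```

For example: `write_inventory ips.txt ansible_hosts`.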

Step 3: Run the playbook.

Now it is time to run the playbook with the necessary run-time variables. Variables passed at run time as extra vars (-e) override the ones defined inline in the playbook.

Here is the basic syntax for executing this playbook:

```bash
ansible-playbook add-key.yml \
  -i ansible_hosts \
  --user <remote user name> \
  --key-file <Your_Private_key_file.pem> \
  -e "key=<full path of the user's public key file>"
```
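If you run this often, a thin wrapper can keep the flags consistent. The hypothetical `run_add_key` function below assembles the command from the syntax above. If the remote user is left empty, it omits `--user`, which fits the mixed-distribution setup described later in this article, where the user comes from the inventory. The `DRY_RUN` switch is an assumption added for previewing.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the command syntax above:
#   run_add_key <remote_user|""> <private_key.pem> <pubkey_file> [present|absent]
# Set DRY_RUN=1 to print the command instead of running it.
run_add_key() {
  local remote_user=$1 pem=$2 pubkey=$3 status=${4:-present}
  local -a args=(add-key.yml -i ansible_hosts)

  # Pass --user only when given; otherwise ansible_user must come from the inventory
  if [ -n "$remote_user" ]; then
    args+=(--user "$remote_user")
  fi
  args+=(--key-file "$pem" -e "key=$pubkey status=$status")

  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo ansible-playbook "${args[@]}"   # display only: argument quoting is not shown
  else
    ansible-playbook "${args[@]}"
  fi
}
```

For example, `DRY_RUN=1 run_add_key ec2-user SaravAK-Privatekey.pem /tmp/rohit_new_employee.pub` previews the RHEL command. Dropping `DRY_RUN=1` actually runs it.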

Now let's pretend that these two IPs belong to RHEL virtual machines whose default user is ec2-user:

```bash
ansible-playbook add-key.yml -i ansible_hosts \
  --user ec2-user \
  --key-file SaravAK-Privatekey.pem \
  -e "key=/tmp/rohit_new_employee.pub"
```

If the servers are Ubuntu machines, change the remote user to ubuntu, as shown below:

```bash
ansible-playbook add-key.yml -i ansible_hosts \
  --user ubuntu \
  --key-file SaravAK-Privatekey.pem \
  -e "key=/tmp/rohit_new_employee.pub"
```

If your remote machines run different distributions (Linux, CentOS, Ubuntu) at the same time, scroll down to see another approach.

Here is a brief video recording of me running this playbook and explaining the steps. Refer to it if you have any questions.

Step 4: SSH to verify

You can now ask the new user to try to SSH with their private key.

If the security group allows the SSH connection, the newly added user should be able to SSH to the server from now on using their private key.

```bash
ssh -i <user's private key file> <username>@<public IP of the AWS instance>
```
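To check from the admin side that the key really landed, compare the key material of the user's `.pub` file with the instance's `authorized_keys`. The key material is the key type plus the base64 blob, ignoring the trailing comment. The hypothetical `key_is_authorized` function below is a sketch of that comparison. It assumes both files are available locally (for example, run on the instance itself) and that matching lines carry no leading key options such as `from="..."`.

```bash
#!/usr/bin/env bash
# Hypothetical check: key_is_authorized <authorized_keys_file> <pubkey_file>
# Compares only fields 1 and 2 (key type + base64 blob), ignoring comments.
# Assumption: lines have no leading options such as from="..." or command="...".
key_is_authorized() {
  local auth=$1 pub=$2 want
  want=$(awk 'NR == 1 {print $1, $2}' "$pub")
  [ -n "$want" ] || return 1
  # exit 0 if any line matches, 1 otherwise
  awk -v want="$want" '($1 " " $2) == want {found = 1} END {exit !found}' "$auth"
}
```

For example: `key_is_authorized ~/.ssh/authorized_keys /tmp/rohit_new_employee.pub && echo "key present"`.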

Refer to the video if you have questions about how to SSH.

How to use different Linux distributions (Linux, RHEL, Ubuntu) at the same time

The whole purpose of this playbook is to let you add an SSH key to multiple EC2 instances at the same time, so this case is covered too.

Compared to the previous process, you have to make two changes before running the playbook.

Change 1: Define variables such as the user and port number for each host.

```ini
[hosts_to_add_key]
54.34.23.112 ansible_ssh_port=2222 ansible_user=ubuntu
server1.gritfy.local ansible_user=ec2-user
webserver.gritfy.com ansible_user=centos

[hosts_to_add_key:vars]
ansible_ssh_common_args="-o StrictHostKeyChecking=no"
```

Change 2: Run the playbook without --user at run time

You do not have to specify the user at run time, because you have already set it for each host in the Ansible inventory (hosts) file.

```bash
ansible-playbook add-key.yml \
  -i ansible_hosts \
  --key-file SaravAK-Privatekey.pem \
  -e "key=/tmp/rohit_new_employee.pub"
```

How to remove the SSH public key using this playbook - revoking user access

You may want to remove the public key you just added, that is, revoke the access of the employee you added earlier. All you have to do is pass one more argument to the playbook to invert the action it performs.

If you look at the playbook, there is a variable named status set to present, which tells Ansible that the key has to be added.

To tell Ansible to remove the key instead, set status to absent at run time. That's it.

You can use the following command to change it at run time without modifying the Ansible playbook.

```bash
ansible-playbook add-key.yml -i ansible_hosts \
  --user ubuntu \
  --key-file SaravAK-Privatekey.pem \
  -e "key=/tmp/rohit_new_employee.pub status=absent"
```
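For intuition, revoking with `status=absent` means the matching key line disappears from `authorized_keys` while every other line stays. Below is a rough local equivalent using a hypothetical `remove_pubkey` function. It is an illustration, not the module's implementation. It matches on key type and blob, so a different trailing comment does not prevent removal.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of revoking a key: drop lines whose key type + blob
# match the given public key; all other lines are kept untouched.
remove_pubkey() {
  local auth=$1 pub=$2 want tmp
  want=$(awk 'NR == 1 {print $1, $2}' "$pub")
  [ -n "$want" ] || return 1
  tmp=$(mktemp) || return 1
  # keep every line that does not match, then rewrite the file in place
  # (cat > keeps the original file's owner and permissions)
  awk -v want="$want" '($1 " " $2) != want' "$auth" > "$tmp" &&
    cat "$tmp" > "$auth"
  rm -f "$tmp"
}
```

For example: `remove_pubkey ~/.ssh/authorized_keys /tmp/rohit_new_employee.pub`.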

Hope this helps.

Do you have any requirements where you need our professional assistance? Write us an email.

We like challenges just the way you do.

Let me know your thoughts in the comments section.

Cheers

Sarav AK
