NGINX has become a standard choice for implementing reverse proxies, and usage statistics suggest that at least 20% of the internet is served by the NGINX web server.

I presume you already know what reverse and forward proxies are and how they work.

Let us move on to the objective of this article.

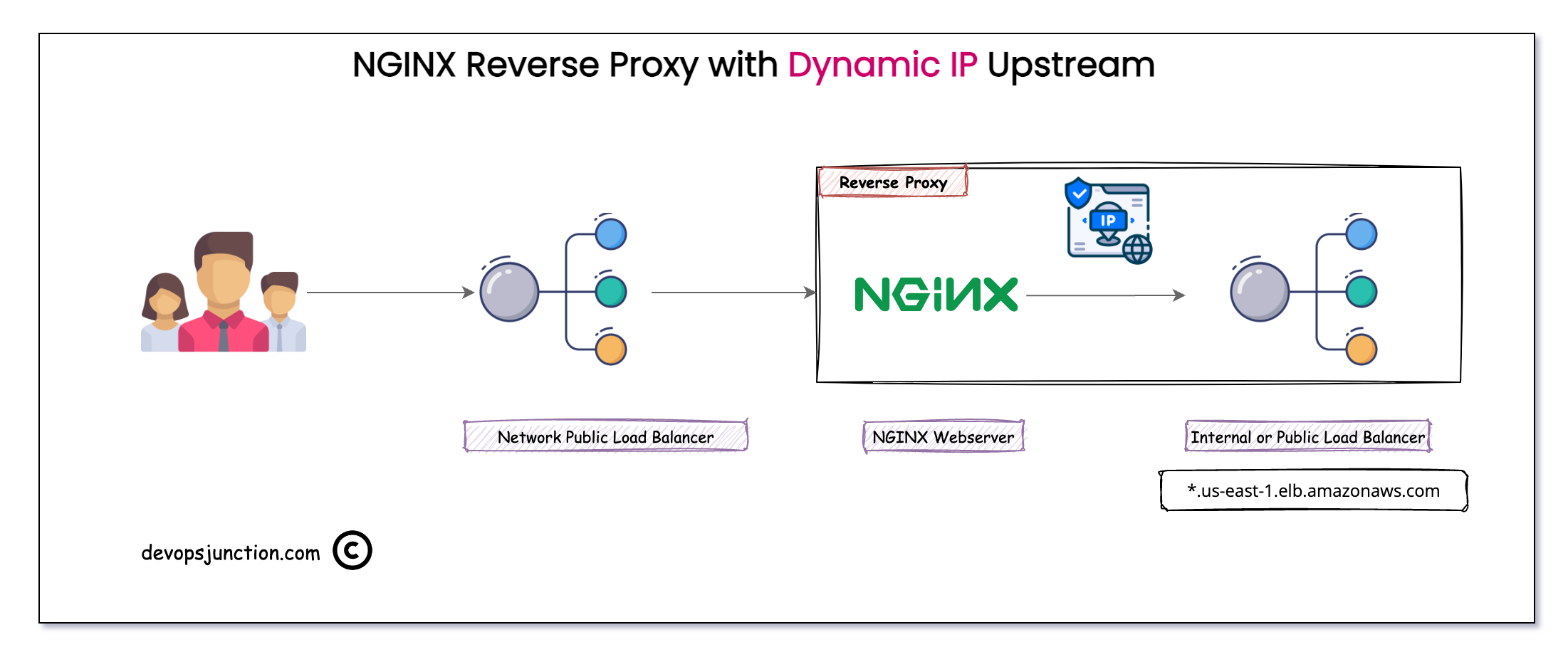

NGINX reverse proxy to Dynamic Upstream

Today we are going to look at a specific use case where the backend or upstream is dynamic in nature.

For example, consider the following scenario.

We have an NGINX reverse proxy connecting to some internal or public load balancers in the AWS ecosystem.

As you may know, the Application / Classic (Layer 7) load balancers in AWS are dynamic in nature and their IP addresses are not static. A load balancer changes its operating IP addresses from time to time.

That's why AWS always gives you a DNS name instead, like this:

internal-api-19c91c.us-east-1.elb.amazonaws.com

You can see that every public or internal load balancer gets a domain name like this, not a static IP.

If you run dig or nslookup against this domain name, you will notice that it is backed by DNS round robin with multiple IP addresses.

In terms of Network architecture and design, this is great.

But when you use NGINX as a reverse proxy with one of these load balancers as the backend or upstream, a problem arises.

By default, NGINX resolves the backend/upstream hostname once and caches the IP address for its lifetime, that is, until a reload or restart.

Like many network systems, NGINX caches the backend or upstream IP address to reduce DNS lookups and thereby improve performance.

As the saying goes, "there is no single solution that fits all", and in this corner case the cached IP address can cause a production outage or downtime.

But how?

Once NGINX has cached the IP address, it keeps sending requests to that IP even after the backend (in our case, the ALB) has changed its IP address.

So what happens when there is no backend/upstream left to respond at the cached address? The connection times out with a 504 Gateway Timeout, or a 502 Bad Gateway error is returned.

So how are we going to address it?

The Solution to the NGINX Dynamic IP Upstream (tested in production)

Let's say you would normally configure your reverse proxy in one of two ways.

You either assign the backend or upstream server to a variable named upstream_endpoint and reference it in proxy_pass:

    proxy_pass http://$upstream_endpoint/notifications/api/v1$1;

or you use the URL directly in proxy_pass, as follows:

    proxy_pass http://internal-api-19b919-.us-east-1.elb.amazonaws.com/api/v1$1;

The complete nginx configuration file may look like this

    # An events block is required in a standalone nginx.conf
    events {}

    http {
        server {
            set $upstream_endpoint internal-api-19b919-.us-east-1.elb.amazonaws.com;
            listen 80;

            # Capture the full request URI in $1
            location ~ (^.*$) {
                proxy_set_header X-Forwarded-Host $host;
                proxy_set_header X-Forwarded-Server $host;
                proxy_set_header X-Real-IP $remote_addr;
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
                proxy_set_header X-Url-Scheme $scheme;
                proxy_set_header X-Forwarded-Proto $scheme;
                proxy_set_header Host $http_host;
                proxy_redirect off;
                client_max_body_size 100m;
                client_body_buffer_size 100m;

                # No resolver is configured yet
                proxy_pass http://$upstream_endpoint/api/v1$1;
            }
        }
    }

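But this alone does not solve the problem. A hostname that NGINX can read directly from the configuration is resolved once, at startup or reload, which is the DNS caching problem described above. A proxy_pass that contains a variable is resolved at request time instead, but only if NGINX has been told which DNS server to ask. Without that, the lookup fails with a "no resolver defined" error in the error log.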

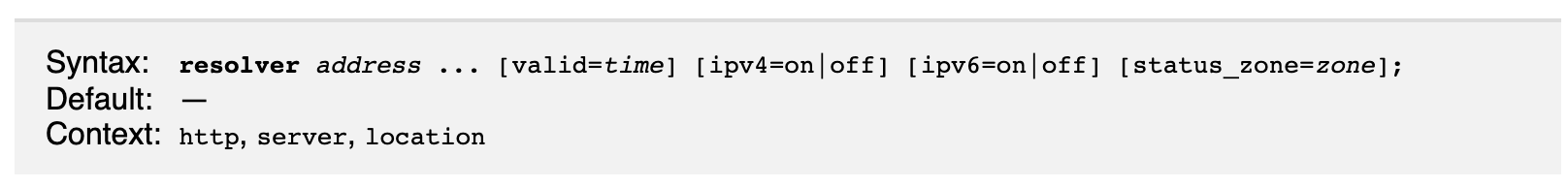

The solution is simple: add the resolver directive to the NGINX configuration and keep the upstream hostname in a variable.

Here is the syntax of this directive
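As documented on nginx.org, the directive takes one or more name server addresses followed by optional parameters. The fragment below is a minimal sketch: the second address (10.0.0.3) is a placeholder and not a value from this setup, and newer NGINX releases accept further parameters that are not shown here.

```nginx
# General form, per the nginx.org documentation:
#   resolver address ... [valid=time] [ipv6=on|off];
# Allowed in the http, server, and location contexts.

# Example: two name servers; cached answers are treated as valid for
# 10 seconds regardless of the TTL in the DNS response.
# 10.0.0.3 is a hypothetical second name server.
resolver 10.0.0.2 10.0.0.3 valid=10s;
```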

There is a special parameter available if you are using OpenResty, the NGINX-based distribution.

For OpenResty

    resolver local=on valid=1s;

This resolver line instructs OpenResty to take its name servers from the local system configuration and to treat each resolved answer as valid for 1 second.

Here the special local=on option makes NGINX use the DNS servers specified in the /etc/resolv.conf file of the local system.

This option does not exist in stock NGINX, so we need an alternative there.

For Normal NGINX

In stock NGINX you do not have the local=on parameter mentioned earlier.

So you have to specify the DNS server IP address directly.

You can use a popular public DNS server such as 8.8.8.8 or 8.8.4.4:

    resolver 8.8.8.8 valid=1s;

Or you can find out which resolver the local machine uses by checking your /etc/resolv.conf file.

The following command may help:

    awk '/^nameserver/ {print $2}' /etc/resolv.conf | tr '\n' ' '

This prints the name server IP addresses, which you can use like this. In my case it is 10.0.0.2:

    resolver 10.0.0.2 valid=1s;

Or, if you know your DNS server's IP address and port number, you can use those directly as well.
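As a small illustration of the address-plus-port form, the fragment below points the resolver at a name server on a non-default port. The port 5353 is purely hypothetical; substitute whatever your DNS server actually listens on.

```nginx
# Sketch only: 5353 is a hypothetical port, not one used in this setup.
# Without an explicit port, NGINX queries the name server on port 53.
resolver 10.0.0.2:5353 valid=1s;
```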

If you are running in Kubernetes, you can use kube-dns itself as the resolver, like this:

    resolver kube-dns.kube-system.svc.cluster.local valid=1s;
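One thing to watch for in Kubernetes, sketched below under the assumption of the default cluster.local cluster domain: the NGINX resolver does not apply the search domains from /etc/resolv.conf, so an upstream that is itself a Kubernetes service needs its fully qualified name. The service name my-api and the default namespace are hypothetical.

```nginx
# Sketch only: my-api and the default namespace are hypothetical names.
resolver kube-dns.kube-system.svc.cluster.local valid=1s;

# Use the fully qualified service name. A short name such as "my-api"
# relies on resolv.conf search domains, which the NGINX resolver
# does not use.
set $upstream_endpoint my-api.default.svc.cluster.local;
```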

Since valid=1s overrides the TTL of the DNS answer and sets it to 1 second, a cached address expires after one second and the next request triggers a fresh DNS lookup, so the latest IP is picked up.

You can optionally increase the valid value to reduce the load on your DNS server.
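How high to go is a judgment call. The sketch below shows two alternatives, with values chosen only for illustration: a longer fixed validity, or no valid parameter at all, in which case NGINX caches each answer for the TTL returned by the DNS server. The resolver_timeout directive, which is not part of the original setup, bounds how long a single lookup may take.

```nginx
# Option 1: override the record TTL and re-resolve at most every 30 seconds.
resolver 10.0.0.2 valid=30s;

# Option 2 (use instead of option 1, not together with it): omit valid=
# so that NGINX honors the TTL returned in each DNS answer.
# resolver 10.0.0.2;

# Give up on a lookup after 5 seconds instead of the default 30 seconds.
resolver_timeout 5s;
```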

This way, the stale DNS cache issue with the backend or upstream goes away.
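One detail is worth spelling out: as far as I understand stock open-source NGINX, the resolver is only consulted when proxy_pass contains a variable. The contrast can be sketched as follows; the two location paths are hypothetical and exist only to put both forms side by side.

```nginx
# Sketch only: the /cached/ and /fresh/ paths are hypothetical.

# No variable anywhere in proxy_pass: the hostname is resolved once,
# at startup or reload, and the resolver directive is not consulted.
location /cached/ {
    proxy_pass http://internal-api-19b919-.us-east-1.elb.amazonaws.com/api/v1/;
}

# Variable in proxy_pass: the hostname is resolved at request time
# through the configured resolver, so IP changes are picked up.
location ~ ^/fresh(/.*)$ {
    resolver 10.0.0.2 valid=1s;
    set $upstream_endpoint internal-api-19b919-.us-east-1.elb.amazonaws.com;
    proxy_pass http://$upstream_endpoint/api/v1$1;
}
```

This is why the configurations in this article keep the hostname in $upstream_endpoint rather than writing it into proxy_pass as plain text.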

Here is the complete configuration for OpenResty

    # An events block is required in a standalone nginx.conf
    events {}

    http {
        server {
            # OpenResty only: read name servers from /etc/resolv.conf
            resolver local=on valid=1s;

            set $upstream_endpoint internal-api-19b919-.us-east-1.elb.amazonaws.com;
            listen 80;

            # Capture the full request URI in $1
            location ~ (^.*$) {
                proxy_set_header X-Forwarded-Host $host;
                proxy_set_header X-Forwarded-Server $host;
                proxy_set_header X-Real-IP $remote_addr;
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
                proxy_set_header X-Url-Scheme $scheme;
                proxy_set_header X-Forwarded-Proto $scheme;
                proxy_set_header Host $http_host;
                proxy_redirect off;
                client_max_body_size 100m;
                client_body_buffer_size 100m;

                # The variable makes NGINX resolve the hostname at request time
                proxy_pass http://$upstream_endpoint/notifications/api/v1$1;
            }
        }
    }

Here is the complete configuration for NGINX

    # An events block is required in a standalone nginx.conf
    events {}

    http {
        server {
            # Name server taken from /etc/resolv.conf on this machine
            resolver 10.0.0.2 valid=1s;

            set $upstream_endpoint internal-api-19b919-.us-east-1.elb.amazonaws.com;
            listen 80;

            # Capture the full request URI in $1
            location ~ (^.*$) {
                proxy_set_header X-Forwarded-Host $host;
                proxy_set_header X-Forwarded-Server $host;
                proxy_set_header X-Real-IP $remote_addr;
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
                proxy_set_header X-Url-Scheme $scheme;
                proxy_set_header X-Forwarded-Proto $scheme;
                proxy_set_header Host $http_host;
                proxy_redirect off;
                client_max_body_size 100m;
                client_body_buffer_size 100m;

                # The variable makes NGINX resolve the hostname at request time
                proxy_pass http://$upstream_endpoint/api/v1$1;
            }
        }
    }

To learn more about the resolver directive, refer to the following links:

- http://nginx.org/en/docs/http/ngx_http_core_module.html#resolver

- https://github.com/openresty/openresty/#resolvconf-parsing

Cheers

Sarav AK
