In this article, we are going to see how to save SNS messages to S3 using Kinesis Data Firehose.

The first step in building a data engineering pipeline for your organization is storing raw, unorganized data with long retention and a sensible partitioning scheme.

Therefore, persisting SNS data to long-term storage such as S3 becomes a necessity.

Once your data is saved in S3, you can transform it with ETL and data engineering tools such as AWS Glue, build a data lake, and derive meaningful insights.

Before we move on to the objective, let us take a quick look at SNS and Kinesis Data Firehose.

SNS - Simple Notification Service

Amazon Simple Notification Service (SNS) is a fully managed messaging service for both application-to-application (A2A) and application-to-person (A2P) communication. The A2A pub/sub functionality provides topics for high-throughput, push-based, many-to-many messaging between distributed systems, microservices, and event-driven serverless applications. The A2P capabilities enable you to send messages to users on their preferred communication channels.

Kinesis Data Firehose (Amazon Data Firehose)

Amazon Kinesis Data Firehose is a fully managed service for delivering real-time streaming data to destinations such as Amazon S3, Amazon Redshift, Amazon OpenSearch Service (formerly Amazon Elasticsearch Service), and Splunk. With Kinesis Data Firehose, you don't need to write applications or manage resources. You configure your data producers to send data to Kinesis Data Firehose, and it automatically delivers the data to the destination that you specified. It's a reliable way to load massive volumes of data into data lakes, data stores, and analytics services.

Creating a Data Firehose stream with an S3 destination

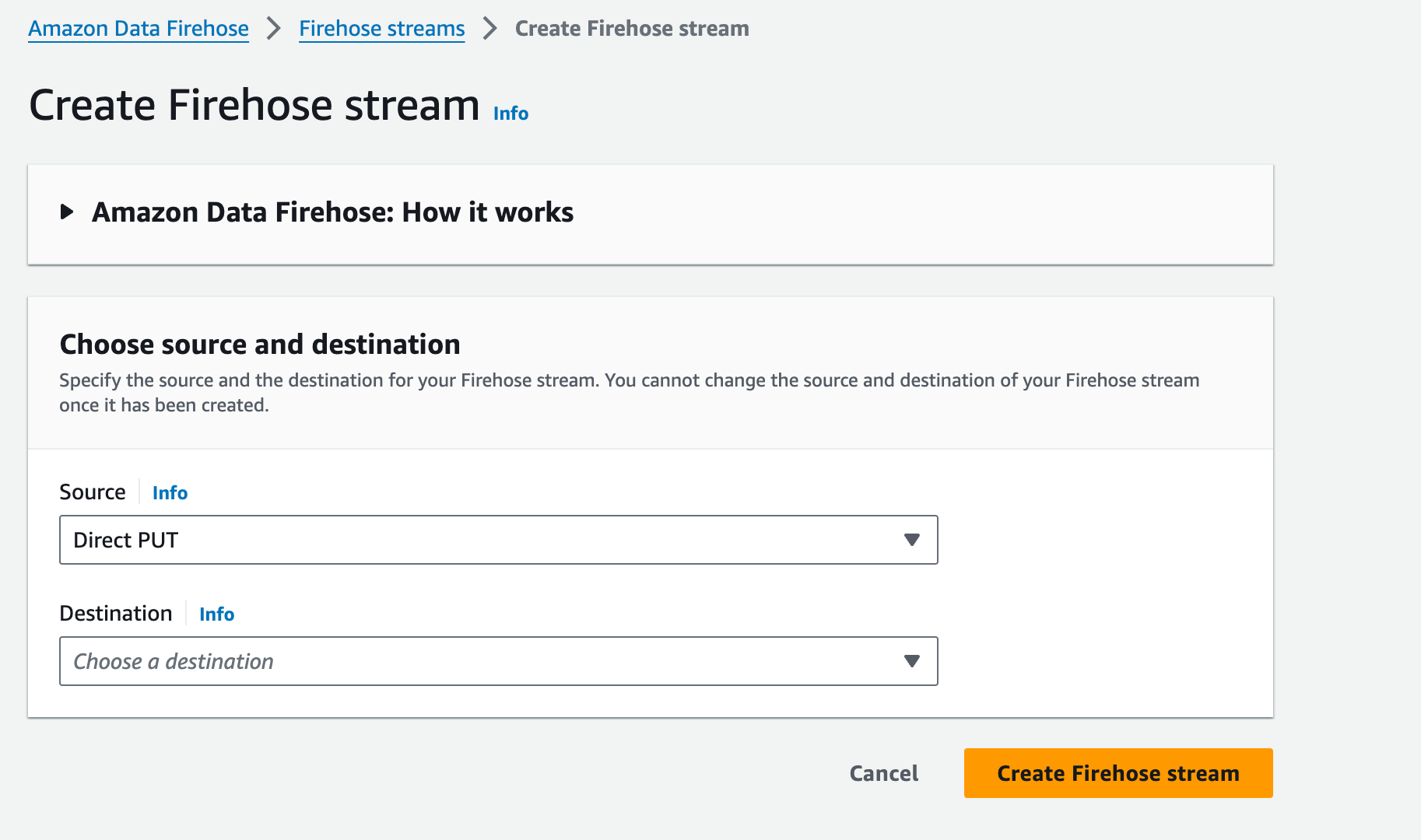

Search for Amazon Data Firehose in your AWS console and click Create Firehose stream.

On the next screen, select Direct PUT as the source and Amazon S3 as the destination.

If you would like to transform your events before they are written to S3, you can attach a Lambda function as a transformer. To keep things simple, we are going without one.
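If you do decide to add a transformer later, a Firehose transformation Lambda receives a batch of base64-encoded records and must return every `recordId` with a `result` and re-encoded `data`. Here is a minimal sketch, assuming you want newline-delimited JSON in S3 so each message sits on its own line; the newline-appending logic is an illustrative choice, not something this setup requires.

```python
import base64


def lambda_handler(event, context):
    """Sketch of a Firehose transformation Lambda.

    Appends a newline to every record so the S3 objects end up as
    newline-delimited JSON (one message per line).
    """
    output = []
    for record in event["records"]:
        payload = base64.b64decode(record["data"]).decode("utf-8")
        # Ensure exactly one trailing newline per record.
        transformed = payload.rstrip("\n") + "\n"
        output.append(
            {
                "recordId": record["recordId"],  # must echo the incoming ID
                "result": "Ok",  # other valid values: "Dropped", "ProcessingFailed"
                "data": base64.b64encode(transformed.encode("utf-8")).decode("utf-8"),
            }
        )
    return {"records": output}
```

Firehose expects a `result` of `Ok`, `Dropped`, or `ProcessingFailed` for every record it sent; records marked `ProcessingFailed` are written under the error output prefix instead of the normal one.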

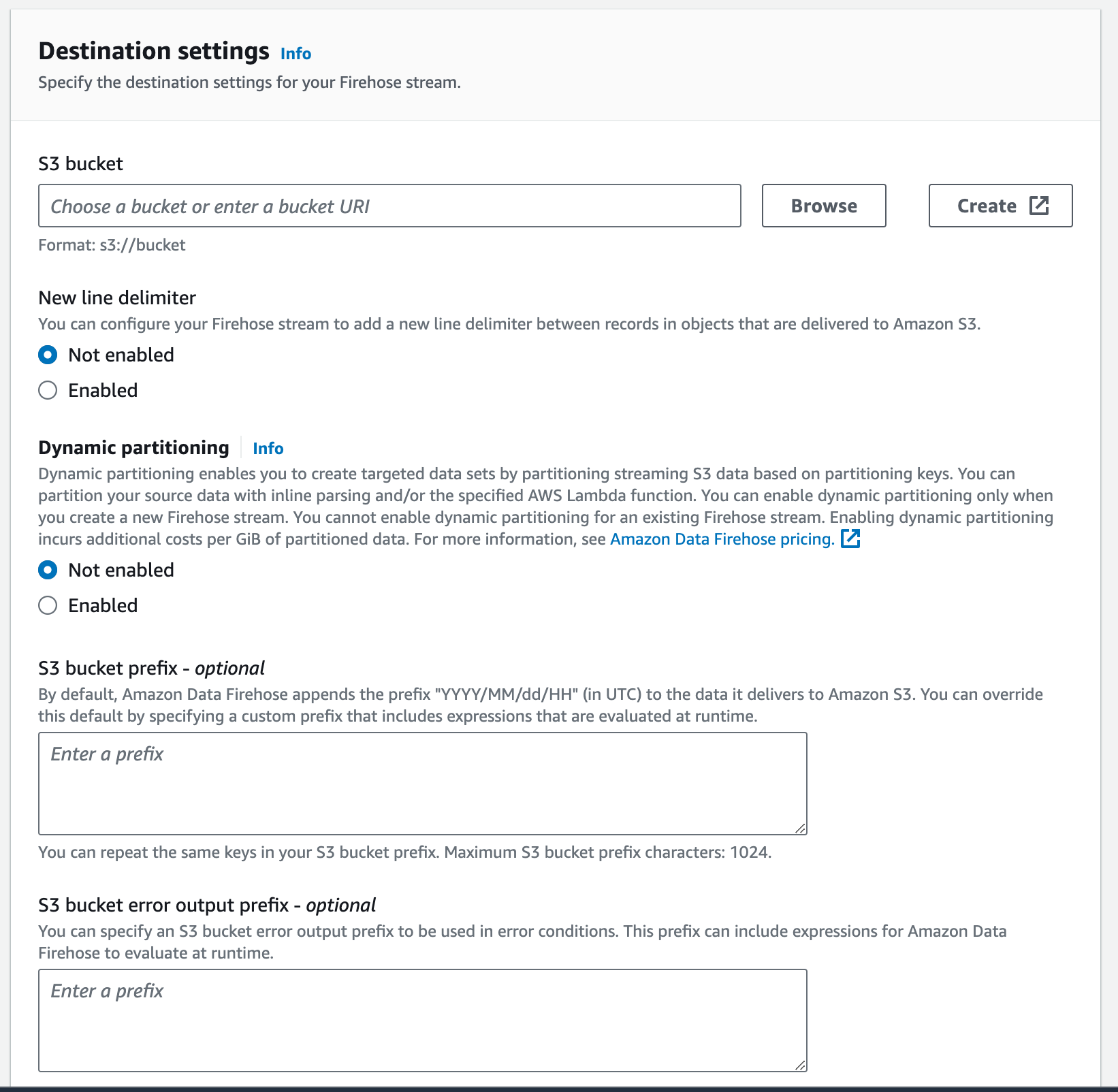

Under Destination settings, enter the S3 bucket details.

You can optionally enable dynamic partitioning, which groups records into S3 prefixes based on keys extracted from the record data.

By default, Kinesis Data Firehose writes objects to S3 under a UTC time-based prefix of the form YYYY/MM/dd/HH/ (year, month, day, and hour directories), which is sufficient in most cases.
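To know which folder a given message should land in, you can reproduce the default prefix locally. A small sketch, assuming no custom prefix is configured so Firehose uses the plain UTC `YYYY/MM/dd/HH/` format; `default_firehose_prefix` is a hypothetical helper, not part of any AWS SDK.

```python
from datetime import datetime, timezone


def default_firehose_prefix(arrival_time: datetime) -> str:
    """Return the YYYY/MM/dd/HH/ prefix for a given timestamp.

    The default prefix is evaluated in UTC, so the timestamp is converted
    to UTC first. Naive datetimes are assumed to already be in UTC.
    """
    if arrival_time.tzinfo is None:
        arrival_time = arrival_time.replace(tzinfo=timezone.utc)
    return arrival_time.astimezone(timezone.utc).strftime("%Y/%m/%d/%H/")
```

For example, `default_firehose_prefix(datetime.fromisoformat("2024-03-05T01:00:00+05:30"))` returns `2024/03/04/19/`, because 01:00 at UTC+5:30 is 19:30 UTC on the previous day. Keep this in mind when searching the bucket from a non-UTC time zone.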

You can optionally enter an error output prefix; if you leave it empty, failed records are written under a prefix named processing-failed in the same S3 bucket.

You can also configure encryption, file format, and other options on the same page under Advanced settings.

Once you are done customizing the configuration, name your stream and click Create Firehose stream.
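If you prefer scripting over clicking through the console, the same choices map onto the Firehose `CreateDeliveryStream` API. The sketch below only builds the request for boto3's `create_delivery_stream`. The bucket ARN and the role ARN are placeholders you must supply (this is the role Firehose assumes to write into S3, which the console can create for you, not the SNS role from the next section), and the buffering values are an assumption mirroring common console defaults.

```python
def build_create_stream_request(stream_name, bucket_arn, firehose_role_arn):
    """Build keyword arguments for boto3's firehose.create_delivery_stream().

    All three arguments are placeholders supplied by the caller.
    """
    return {
        "DeliveryStreamName": stream_name,
        "DeliveryStreamType": "DirectPut",  # same as choosing Direct PUT as the source
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": firehose_role_arn,
            "BucketARN": bucket_arn,
            # Flush to S3 every 5 MB or 300 seconds, whichever comes first.
            "BufferingHints": {"SizeInMBs": 5, "IntervalInSeconds": 300},
            "CompressionFormat": "UNCOMPRESSED",
            # No "Prefix" key, so Firehose falls back to its default
            # YYYY/MM/dd/HH/ time-based prefix.
        },
    }
```

You would pass the result as `boto3.client("firehose").create_delivery_stream(**request)`. Keeping the request in a plain function makes it easy to review or unit-test before anything is created in AWS.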

Now your Firehose stream is ready. You just need to connect it to your SNS topic.

Creating an IAM role to enable SNS to write to Firehose

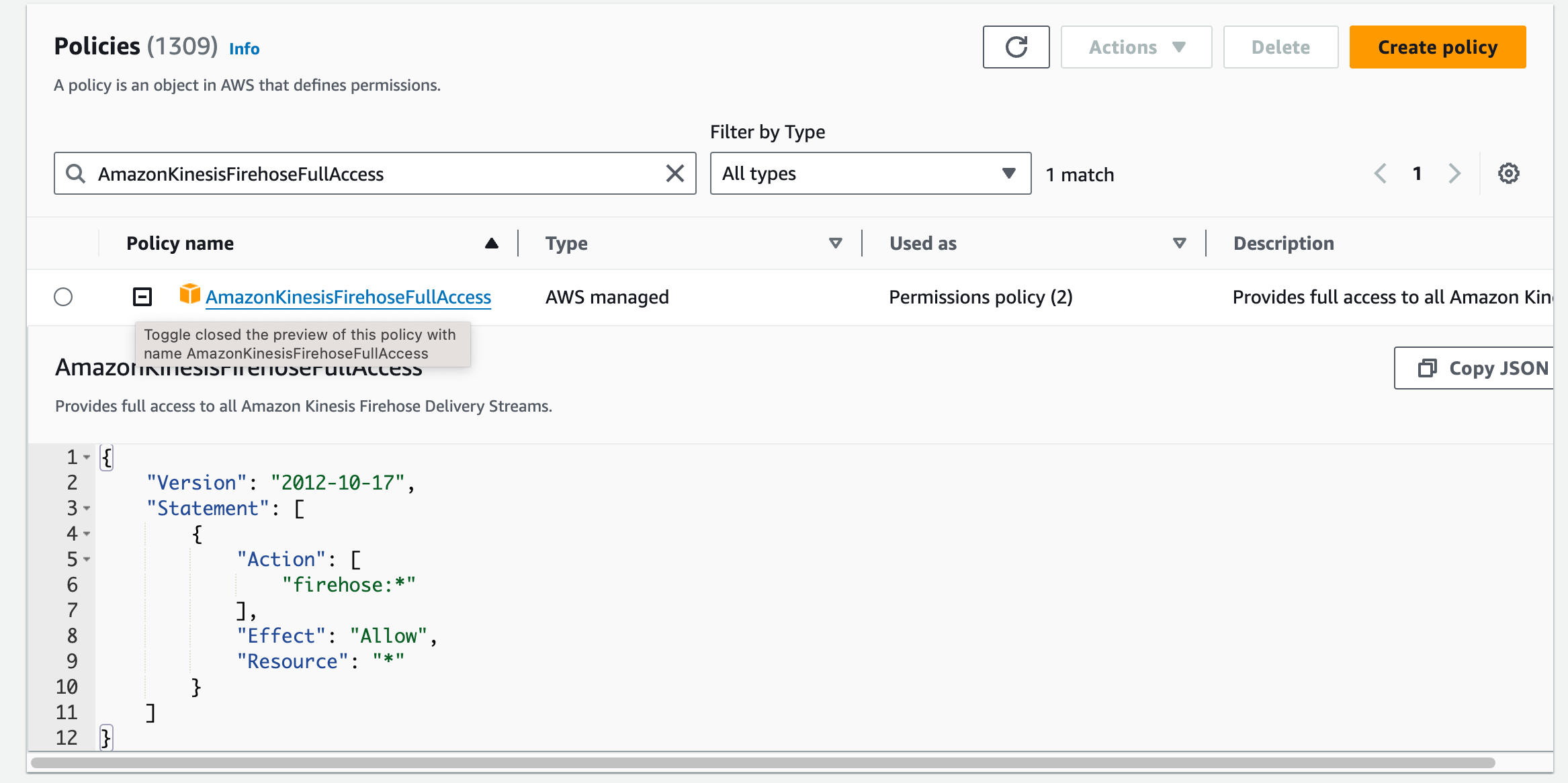

To connect SNS to Firehose, the first prerequisite is an IAM role that SNS can assume. You can attach the AWS managed policy `AmazonKinesisFirehoseFullAccess` to this role, or create your own stricter policy.

Either way, go to IAM Roles and click Create role. The role's trust relationship must allow the SNS service principal (`sns.amazonaws.com`) to assume it.

If you choose the prebuilt policy, attach `AmazonKinesisFirehoseFullAccess` to the newly created role.

Alternatively, create a customer managed policy or an inline policy with the following JSON, replacing the region, account ID, and stream name in the ARN with your own:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "firehose:DescribeDeliveryStream",
        "firehose:ListDeliveryStreams",
        "firehose:ListTagsForDeliveryStream",
        "firehose:PutRecord",
        "firehose:PutRecordBatch"
      ],
      "Resource": [
        "arn:aws:firehose:us-east-1:111111111111:deliverystream/firehose-sns-delivery-stream"
      ],
      "Effect": "Allow"
    }
  ]
}
```
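The permissions policy alone is not enough: SNS must also be allowed to assume the role. Here is a sketch in Python, assuming you create the role with boto3; the helper names are hypothetical, and the region, account ID, and stream name are the sample values from the policy above.

```python
import json


def sns_trust_policy() -> dict:
    """Trust policy that lets the SNS service assume the subscription role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "sns.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def firehose_put_policy(region: str, account_id: str, stream_name: str) -> dict:
    """Least-privilege permissions policy equivalent to the JSON above."""
    stream_arn = f"arn:aws:firehose:{region}:{account_id}:deliverystream/{stream_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "firehose:DescribeDeliveryStream",
                    "firehose:ListDeliveryStreams",
                    "firehose:ListTagsForDeliveryStream",
                    "firehose:PutRecord",
                    "firehose:PutRecordBatch",
                ],
                "Resource": [stream_arn],
            }
        ],
    }


if __name__ == "__main__":
    # Print the trust policy so it can be pasted into the IAM console.
    print(json.dumps(sns_trust_policy(), indent=2))
```

With a boto3 IAM client you could then call `iam.create_role(RoleName=..., AssumeRolePolicyDocument=json.dumps(sns_trust_policy()))` followed by `iam.put_role_policy(RoleName=..., PolicyName=..., PolicyDocument=json.dumps(firehose_put_policy(...)))`.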

Once the role has the policy attached, note down its ARN; you will need it in the next step.

Connecting Data Firehose to SNS to enable S3 persistence

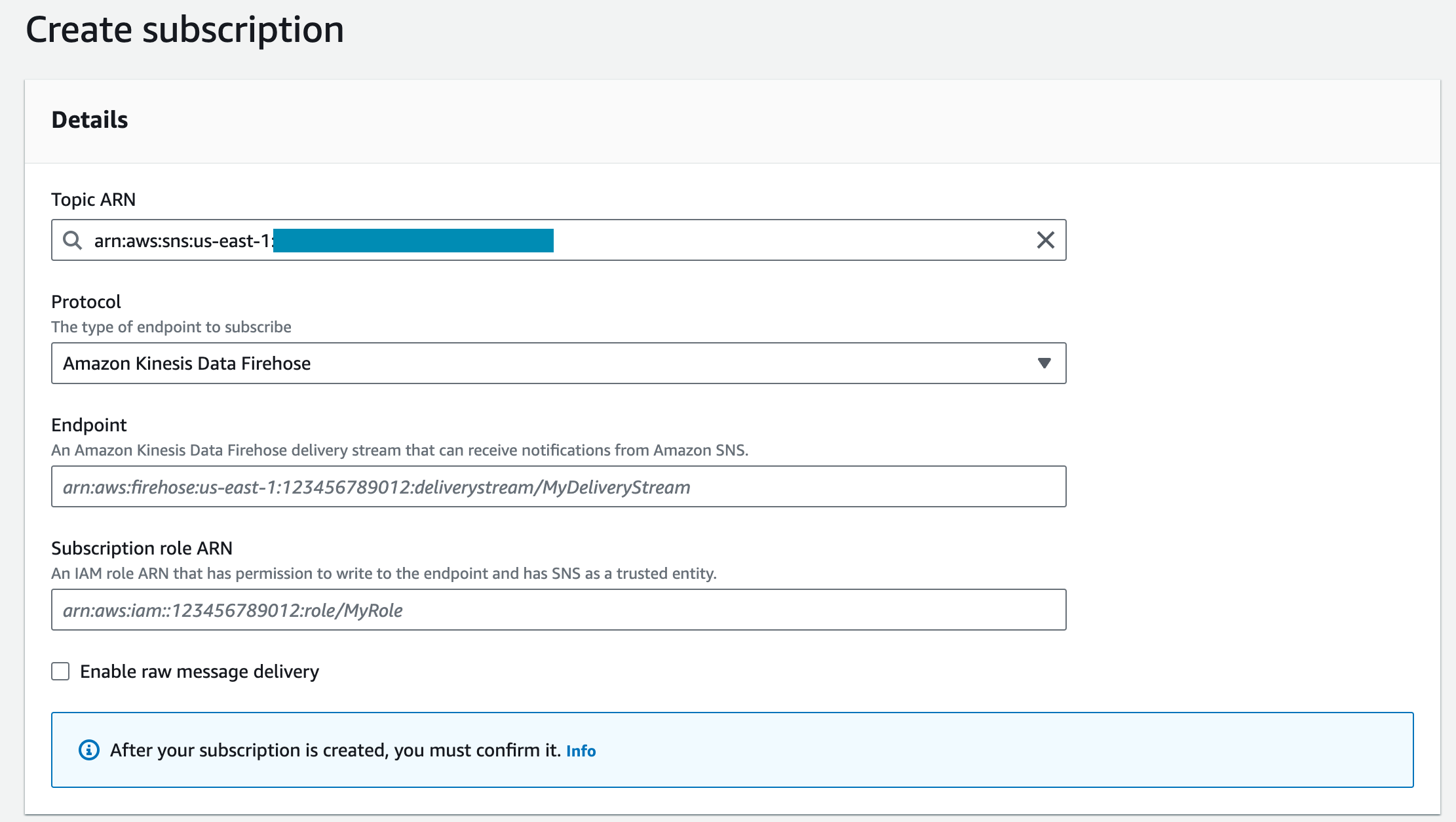

Go to SNS and select your topic of interest.

Click the Create subscription button.

Choose Kinesis Firehose as the protocol from the dropdown.

Enter the Firehose stream ARN in the Endpoint field.

In the Subscription role ARN field, enter the ARN of the IAM role we created in the previous section.

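You can deliver only selected SNS events by using a subscription filter policy.

Let's assume that your SNS message body is JSON with an event_type field. A simple filter policy like the following delivers only the selected event types, such as sign-up, sign-in, sign-out, and account deletion. Because this policy inspects the message body rather than message attributes, set the filter policy scope to Message body.

```json
{
  "event_type": [
    "SIGNUP",
    "SIGNIN",
    "SIGNOUT",
    "ACCOUNT_DELETE"
  ]
}
```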
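To sanity-check which messages the filter policy would let through, here is a rough local approximation of SNS exact-value matching. It is a simplified sketch, not the SNS matching engine: it assumes the policy only uses top-level keys with lists of exact strings and that the scope is the message body. `matches` is a hypothetical helper.

```python
import json

# The filter policy from this section, as a Python dict.
FILTER_POLICY = {"event_type": ["SIGNUP", "SIGNIN", "SIGNOUT", "ACCOUNT_DELETE"]}


def matches(policy: dict, message_body: str) -> bool:
    """Rough local approximation of exact-string matching on a JSON body.

    Every key in the policy must be present in the message, and its value
    must equal one of the listed strings. Real SNS policies also support
    prefix, numeric, anything-but, nested keys and more, which this
    sketch ignores.
    """
    try:
        body = json.loads(message_body)
    except json.JSONDecodeError:
        return False  # this sketch treats a non-JSON body as not matching
    if not isinstance(body, dict):
        return False
    return all(body.get(key) in allowed for key, allowed in policy.items())
```

For instance, a body of `{"event_type": "PASSWORD_RESET"}` does not match, so that message would never reach Firehose or S3.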

It is good practice to configure a redrive policy with an SQS dead-letter queue that collects the events SNS failed to deliver to Firehose.

You can also do this later, as your requirements evolve, by editing the SNS subscription directly.
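If you attach a dead-letter queue, remember that SNS needs permission to send messages to it. A minimal sketch of the SQS queue policy, assuming the queue and topic ARNs are placeholders you supply; `dlq_access_policy` is a hypothetical helper.

```python
def dlq_access_policy(queue_arn: str, topic_arn: str) -> dict:
    """SQS queue policy letting SNS move undeliverable messages to the DLQ.

    The condition limits SendMessage to messages originating from this topic.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "sns.amazonaws.com"},
                "Action": "sqs:SendMessage",
                "Resource": queue_arn,
                "Condition": {"ArnEquals": {"aws:SourceArn": topic_arn}},
            }
        ],
    }
```

You could apply it with `sqs.set_queue_attributes(QueueUrl=..., Attributes={"Policy": json.dumps(policy)})` and then reference the queue in the subscription's `RedrivePolicy` attribute as `{"deadLetterTargetArn": "<queue ARN>"}`.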

Finally, click the Create subscription button.
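The same subscription can also be created programmatically with the SNS `Subscribe` API, where the Kinesis Firehose protocol is spelled `firehose`. A sketch that only builds the boto3 request, assuming the ARNs are placeholders and that your filter policy targets the message body; `build_subscribe_request` is a hypothetical helper.

```python
import json


def build_subscribe_request(topic_arn, firehose_arn, role_arn,
                            filter_policy=None, dlq_arn=None):
    """Keyword arguments for boto3's sns.subscribe(), mirroring the console steps."""
    attributes = {"SubscriptionRoleArn": role_arn}  # required for Firehose endpoints
    if filter_policy is not None:
        attributes["FilterPolicy"] = json.dumps(filter_policy)
        # Match on the JSON message body instead of message attributes.
        attributes["FilterPolicyScope"] = "MessageBody"
    if dlq_arn is not None:
        attributes["RedrivePolicy"] = json.dumps({"deadLetterTargetArn": dlq_arn})
    return {
        "TopicArn": topic_arn,
        "Protocol": "firehose",
        "Endpoint": firehose_arn,
        "Attributes": attributes,
        "ReturnSubscriptionArn": True,
    }
```

You would then call `boto3.client("sns").subscribe(**request)`. All attribute values are passed as strings, which is why the policies are serialized with `json.dumps`.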

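Once the subscription is ready, publish a sample SNS message and verify that it lands in the S3 bucket. Keep in mind that Firehose buffers incoming records, so the object appears only after the buffer size or buffer interval is reached.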
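A quick way to send that sample message is boto3's `sns.publish`. A sketch that builds the request; the payload fields other than `event_type` are hypothetical. Use an `event_type` allowed by your filter policy, otherwise the message is filtered out and never reaches Firehose.

```python
import json
from datetime import datetime, timezone


def build_test_publish(topic_arn: str, event_type: str = "SIGNUP") -> dict:
    """Keyword arguments for boto3's sns.publish() carrying a JSON test event."""
    body = {
        "event_type": event_type,
        "user_id": "test-user",  # hypothetical field for the test payload
        "sent_at": datetime.now(timezone.utc).isoformat(),
    }
    return {"TopicArn": topic_arn, "Message": json.dumps(body)}
```

After publishing with `boto3.client("sns").publish(**request)`, wait for the buffer interval and list objects under the current UTC hour's prefix, for example with `s3.list_objects_v2(Bucket=..., Prefix="2024/03/05/07/")`.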

Monitoring and Observability

There are two places to monitor this pipeline from SNS to S3.

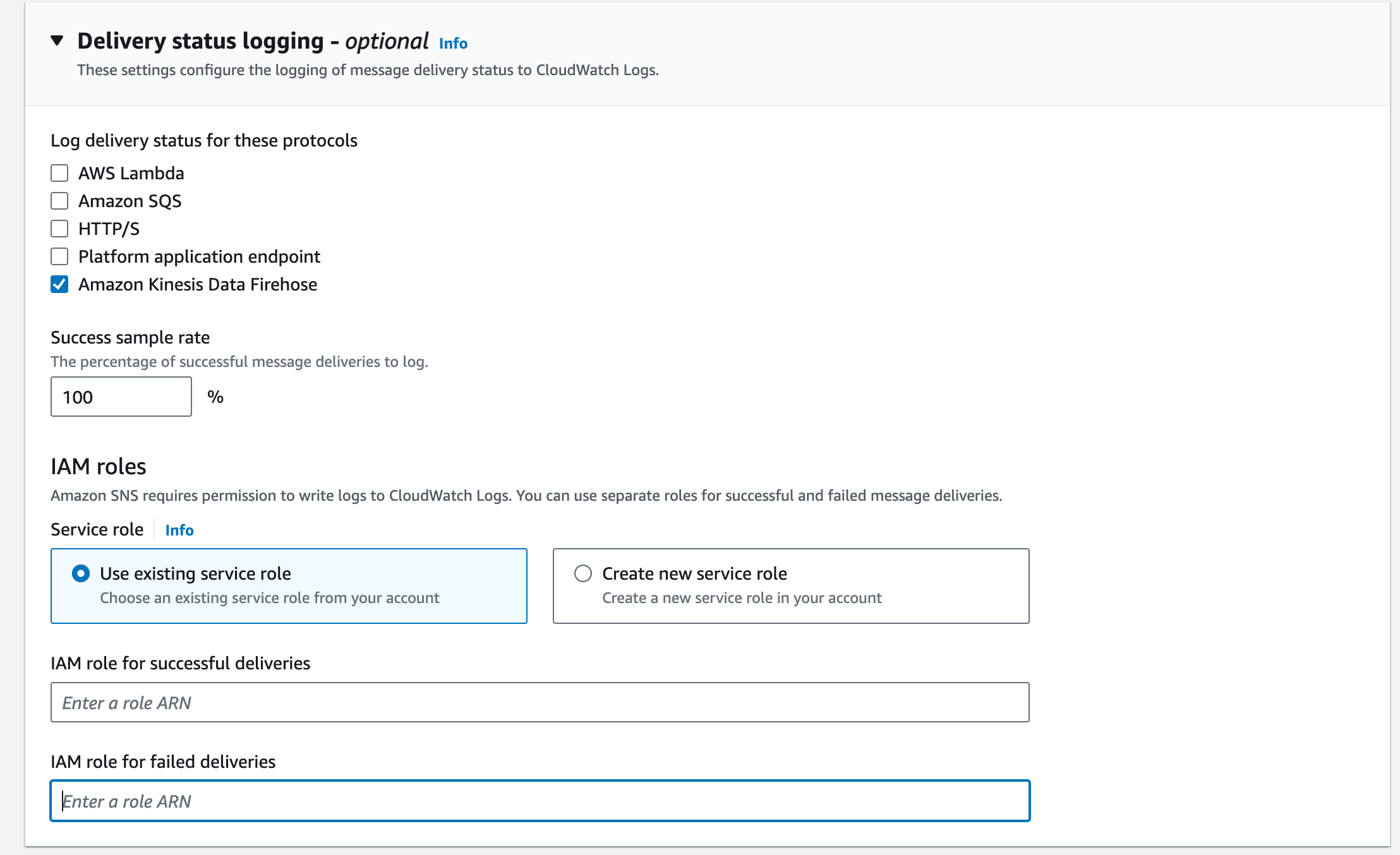

At the SNS level, you can use delivery status logging, which records successful and failed deliveries in CloudWatch Logs.

Read more about how to configure delivery status logging in the AWS documentation.
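For Firehose endpoints, delivery status logging is controlled by topic attributes. A sketch, assuming an IAM role already exists that SNS can assume to write to CloudWatch Logs; `delivery_status_attributes` is a hypothetical helper and the role ARNs are placeholders.

```python
def delivery_status_attributes(success_role_arn, failure_role_arn, sample_rate=100):
    """Topic attributes that enable Firehose delivery status logging.

    sample_rate is the percentage (0-100) of successful deliveries to log;
    failures are always logged once the failure role is set.
    """
    if not 0 <= sample_rate <= 100:
        raise ValueError("sample_rate must be between 0 and 100")
    return {
        "FirehoseSuccessFeedbackRoleArn": success_role_arn,
        "FirehoseSuccessFeedbackSampleRate": str(sample_rate),
        "FirehoseFailureFeedbackRoleArn": failure_role_arn,
    }
```

`SetTopicAttributes` accepts one attribute per call, so you would apply these in a loop: `for name, value in attrs.items(): sns.set_topic_attributes(TopicArn=topic_arn, AttributeName=name, AttributeValue=value)`.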

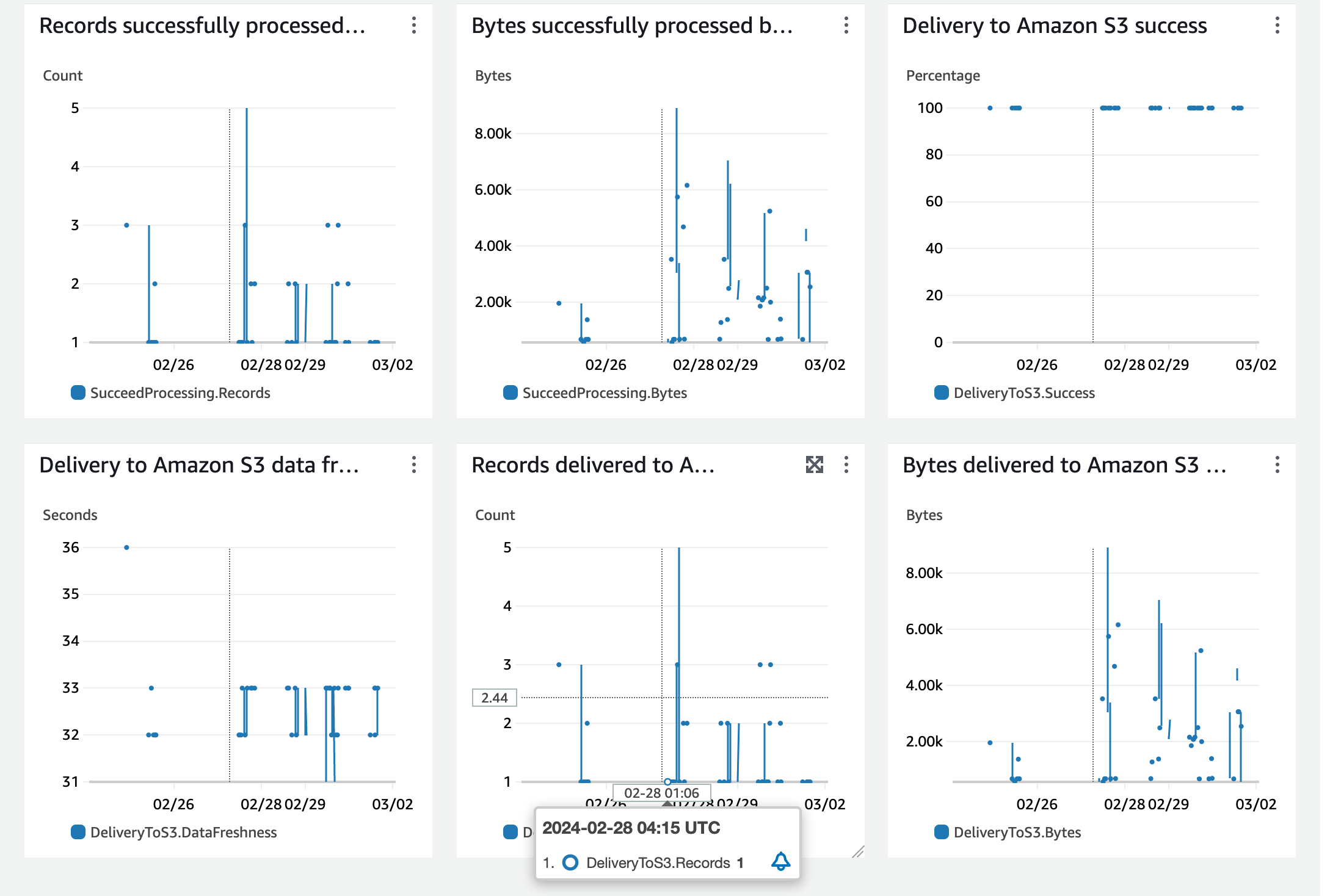

The second place to validate this setup, with little configuration hassle, is the Kinesis Data Firehose monitoring tab.

Firehose comes with built-in monitoring where you can see data flowing through the stream to the destination S3 bucket.

Go to Kinesis Data Firehose and select the Firehose stream you created.

Under the Monitoring tab, you can see metrics such as incoming records and bytes, S3 delivery success, and S3 data freshness.
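The console graphs are backed by CloudWatch, so you can pull the same numbers from a script. A sketch that builds a request for boto3's `cloudwatch.get_metric_statistics`, assuming the stream name from the IAM policy earlier; `firehose_metric_query` is a hypothetical helper. `DeliveryToS3.Success` is a ratio, so `Average` suits it, while counters such as `IncomingRecords` are better read with `Sum`.

```python
from datetime import datetime, timedelta, timezone


def firehose_metric_query(stream_name, metric_name="DeliveryToS3.Success",
                          statistic="Average", hours=3):
    """Keyword arguments for boto3's cloudwatch.get_metric_statistics()."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/Firehose",
        "MetricName": metric_name,
        "Dimensions": [{"Name": "DeliveryStreamName", "Value": stream_name}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 300,  # 5-minute data points
        "Statistics": [statistic],
    }
```

For example, `cloudwatch.get_metric_statistics(**firehose_metric_query("firehose-sns-delivery-stream", "IncomingRecords", statistic="Sum"))` shows how many records arrived from SNS in the last three hours.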

This is how you can connect SNS to S3 with production-grade monitoring, observability, and retries backed by an SQS dead-letter queue.

Hope this helps.

Let me know if you have any questions in the comments section.

Cheers

Sarav AK
