Kubernetes Ingress

In a traditional infrastructure setup, load balancing client requests means configuring a load balancer instance for each application you want to balance. The configuration grows with every application, and carrying the same pattern over to open source platforms makes it more complex and more expensive.

Take Kubernetes as an example. If you deploy user-facing applications on a cluster this way, you create a new load balancer for every service you want to expose and map each service to its load balancer one by one, which complicates the configuration.

If you also route requests by context path, you have to change configuration at several levels, which is extra work for your application developers. Needing a load balancer in front of every service is not an optimal approach.

A hybrid approach, where Kubernetes sits behind a physical load balancer such as an F5 in your data centre, ends up with an even more complicated structure.

But what if all of this could be managed inside the Kubernetes cluster with one more definition file? That is where Ingress comes into the picture. Ingress lets your users reach your applications through a single externally accessible URL, which you can configure to route to different services in your cluster based on the URL path, and it can terminate SSL/TLS at the same time.

One caveat: Ingress is available only on Kubernetes 1.2 and later, and the stable networking.k8s.io/v1 Ingress API requires Kubernetes 1.19 or later.
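
As a rough sketch of the single URL with TLS termination described above, an Ingress can reference a TLS Secret. The hostname `apps.example.com`, the Secret name `apps-example-tls`, the Service name `web`, and the Ingress name are hypothetical placeholders, and the manifest assumes a cluster that serves the networking.k8s.io/v1 API.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-example                  # hypothetical Ingress name
spec:
  tls:
  - hosts:
    - apps.example.com               # hypothetical hostname covered by the certificate
    secretName: apps-example-tls     # hypothetical Secret of type kubernetes.io/tls
  rules:
  - host: apps.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web                # hypothetical backend Service
            port:
              number: 80
```

The referenced Secret is expected to hold the certificate and private key under the `tls.crt` and `tls.key` keys. How the certificate is actually served depends on the Ingress controller in use.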

What is Kubernetes Ingress?

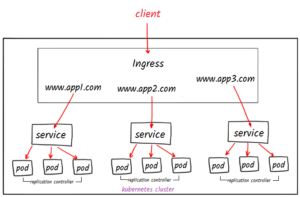

Ingress is a collection of rules that allow inbound connections to reach your cluster services. It acts as a logical layer 7 load balancer sitting behind a physical or cloud load balancer. The Ingress resource is built into Kubernetes and is configured like any other native Kubernetes object, and an Ingress controller applies its rules to route traffic dynamically to the service endpoints.

More precisely, it is an API object that manages the routing of external HTTP/HTTPS traffic to services running in a Kubernetes cluster. The crucial difference from a NodePort or LoadBalancer Service object is that an Ingress object is primarily concerned with layer 7 traffic, that is, HTTP and HTTPS.

If you come from a web background, you can think of Kubernetes Ingress as mod_proxy or mod_jk sitting on an Apache httpd server to perform backend routing and load balancing.

How does Kubernetes Ingress work?

Ingress works on the principle of name-based and path-based virtual hosting, which are traffic routing patterns.

Name-based routing: it supports routing HTTP traffic for multiple hostnames at the same IP address.

When a client HTTP request reaches the Ingress controller, the controller extracts the Host header field from the request and, based on the matching rule in the Ingress object, routes the request to the specific backend service.
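
A minimal sketch of name-based routing might look like the manifest below. The hostnames `foo.example.com` and `bar.example.com` and the Services `foo-svc` and `bar-svc` are hypothetical, and the networking.k8s.io/v1 API is assumed. Both hostnames resolve to the same Ingress IP address, and the Host header decides which backend receives the request.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: name-based-example     # hypothetical Ingress name
spec:
  rules:
  - host: foo.example.com      # requests with "Host: foo.example.com"
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: foo-svc      # hypothetical Service
            port:
              number: 80
  - host: bar.example.com      # requests with "Host: bar.example.com"
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: bar-svc      # hypothetical Service
            port:
              number: 80
```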

Path-based routing: with a single endpoint, you can reach multiple services or applications through a fanout Ingress and treat them as one integrated application.

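NOTE: a ClusterIP Service is meant for routing inside the cluster only and is not reachable from the outside world. With the GKE Ingress setup used in this article, the backend Services are therefore exposed as type NodePort. Ingress controllers that run inside the cluster, such as the NGINX Ingress controller, can route to ClusterIP Services.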

**A few things to understand before demonstrating Ingress**

The three ways to make a Service accessible externally:

Setting the Service type to NodePort: services that need to be exposed to the outside world can be configured with a node port. In this method, each cluster node opens a port on the node itself (hence the name) and redirects traffic received on that port to the underlying service.

Setting the Service type to LoadBalancer: this is an extension of the NodePort type. It makes the service accessible through a dedicated load balancer, provisioned from the cloud infrastructure Kubernetes is running on. The load balancer redirects traffic to the node port across all the nodes. Clients connect to the service through the load balancer's IP.

Creating an Ingress resource: a radically different mechanism for exposing multiple services through a single IP address. It operates at the HTTP level (the application layer, layer 7) and can thus offer more features than layer 4 services can. That is what we are going to see in this article.

Why choose Ingress over LoadBalancer?

One important reason to choose Ingress over LoadBalancer is that each LoadBalancer service requires its own load balancer with its own public IP address, whereas an Ingress requires only one, even when providing access to dozens of services. When a client sends an HTTP request to the Ingress, the host and path in the request determine which service the request is forwarded to.
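
For contrast, here is a sketch of what the LoadBalancer alternative would look like for a single application. The Service name `app1-lb` is hypothetical. The `run: app1` selector and port 80 are borrowed from the app1 example used later in this article.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: app1-lb          # hypothetical name
  namespace: default
spec:
  type: LoadBalancer     # the cloud provider provisions a dedicated load balancer
  selector:
    run: app1            # pods labelled run=app1 receive the traffic
  ports:
  - port: 80             # port exposed on the load balancer
    targetPort: 80       # container port
    protocol: TCP
```

Exposing three applications this way would mean three such Services and three external IP addresses, which is the overhead a single Ingress avoids.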

How to create a Kubernetes Ingress: example setup

Let's demonstrate Ingress using path-based routing for three applications at once. I used GKE to create the Kubernetes cluster.

In Ingress terminology, the component that is deployed to do the routing is the Ingress controller, and the configuration it reads is the Ingress resource.

**Quick recap: Ingress is a decoupling layer between the internet and the NodePort services. It opens the cluster to external traffic and defines the routes to the backend services, which helps keep communication reliable and secure.**

These are the steps we are going to perform to set up Kubernetes Ingress path-based routing.

- Deploy a web application

- Expose your Deployment as a Service internally.

- Create an Ingress resource.

- Visit your applications.

- Serving multiple applications on a Load Balancer.

Step1: Deploying Web Application

Create the Deployment YAML files (kind: Deployment).

app1 - Deployment YAML file

For app1 we are using an Apache image (punitporwal07/apache4ingress).

    # Deployment for app1: Apache container listening on port 80.
    # Deployments are served from apps/v1; extensions/v1beta1 was removed in Kubernetes 1.16.
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: app1
      namespace: default
    spec:
      selector:
        matchLabels:
          run: app1
      template:
        metadata:
          labels:
            run: app1
        spec:
          containers:
          - image: punitporwal07/apache4ingress:1.0
            imagePullPolicy: IfNotPresent
            name: app1
            ports:
            - containerPort: 80
              protocol: TCP

app2 - deployment yaml file

For app2 we are using the hello-app version 1 image from Google samples.

    # Deployment for app2: hello-app 1.0 listening on port 8080.
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: app2
      namespace: default
    spec:
      selector:
        matchLabels:
          run: app2
      template:
        metadata:
          labels:
            run: app2
        spec:
          containers:
          - image: gcr.io/google-samples/hello-app:1.0
            imagePullPolicy: IfNotPresent
            name: app2
            ports:
            - containerPort: 8080
              protocol: TCP

app3 - deployment yaml file

For app3 we are using the hello-app version 2 image from Google samples.

    # Deployment for app3: hello-app 2.0 listening on port 8080.
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: app3
      namespace: default
    spec:
      selector:
        matchLabels:
          run: app3
      template:
        metadata:
          labels:
            run: app3
        spec:
          containers:
          - image: gcr.io/google-samples/hello-app:2.0
            imagePullPolicy: IfNotPresent
            name: app3
            ports:
            - containerPort: 8080
              protocol: TCP

Step2: Expose your Deployment as a Service internally.

Expose each Deployment as a Service of type NodePort (kind: Service).

Service1 YAML file

This Service is for app1, the Apache container on port 80.

    apiVersion: v1
    kind: Service
    metadata:
      name: app1
      namespace: default
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      selector:
        run: app1
      type: NodePort

Service2 YAML file

This Service is for app2, the Google sample app version 1 container on port 8080.

    apiVersion: v1
    kind: Service
    metadata:
      name: app2
      namespace: default
    spec:
      ports:
      - port: 8080
        protocol: TCP
        targetPort: 8080
      selector:
        run: app2
      type: NodePort

Service3 YAML file

This Service is for app3, the Google sample app version 2 container on port 8080.

    apiVersion: v1
    kind: Service
    metadata:
      name: app3
      namespace: default
    spec:
      ports:
      - port: 8080
        protocol: TCP
        targetPort: 8080
      selector:
        run: app3
      type: NodePort

Step3: Create an Ingress resource.

Create the Ingress resource (kind: Ingress).

Ingress resource YAML file

As you can see, we define path-based routing by mapping each path to a backend service name and service port.

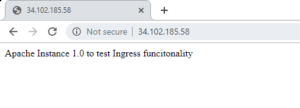

Requests for the path / go to the app1 service on port 80.

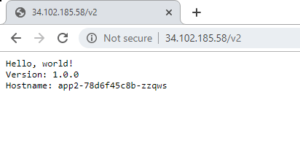

Requests for the path /v2 go to the app2 service on port 8080.

Requests for the path /v3 go to the app3 service on port 8080.

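    # Ingress is served from networking.k8s.io/v1 (Kubernetes 1.19+);
    # extensions/v1beta1 Ingress was removed in Kubernetes 1.22.
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: 3app-ingress
      labels:
        app: my-docker-apps
    spec:
      rules:
      - http:
          paths:
          - path: /
            pathType: ImplementationSpecific   # pathType is required in v1
            backend:
              service:
                name: app1
                port:
                  number: 80
          - path: /v2
            pathType: ImplementationSpecific
            backend:
              service:
                name: app2
                port:
                  number: 8080
          - path: /v3
            pathType: ImplementationSpecific
            backend:
              service:
                name: app3
                port:
                  number: 8080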

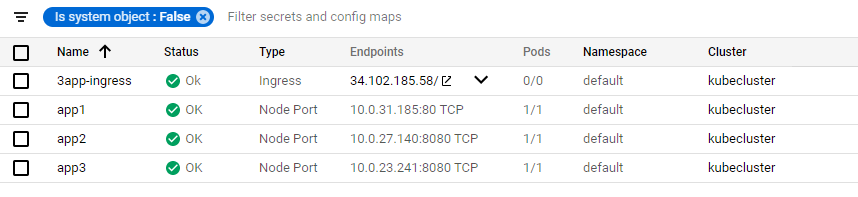
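
Requests that match none of the configured paths are sent to a default backend. As a hedged sketch, assuming the networking.k8s.io/v1 API, the default backend could be set explicitly so that unmatched paths land on app1. The Ingress name `3app-ingress-with-default` is hypothetical, and only one path rule is shown for brevity.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: 3app-ingress-with-default   # hypothetical name
spec:
  defaultBackend:                   # receives requests that match no rule below
    service:
      name: app1
      port:
        number: 80
  rules:
  - http:
      paths:
      - path: /v2
        pathType: ImplementationSpecific
        backend:
          service:
            name: app2
            port:
              number: 8080
```

Whether this is needed depends on how the Ingress controller in use interprets the path /, so treat it as an option to try rather than a required step.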

This is how the Ingress and Services look on the Google Cloud dashboard:

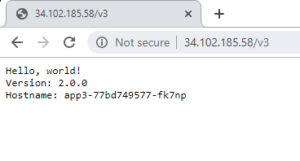

Step4: Visit your applications.

Notice that all three services share the same IP address, unlike the LoadBalancer type, where each service needs a dedicated IP address.

When you access the Ingress endpoint, you can reach all three applications through that single endpoint just by changing the context path, as defined in the Ingress definition.

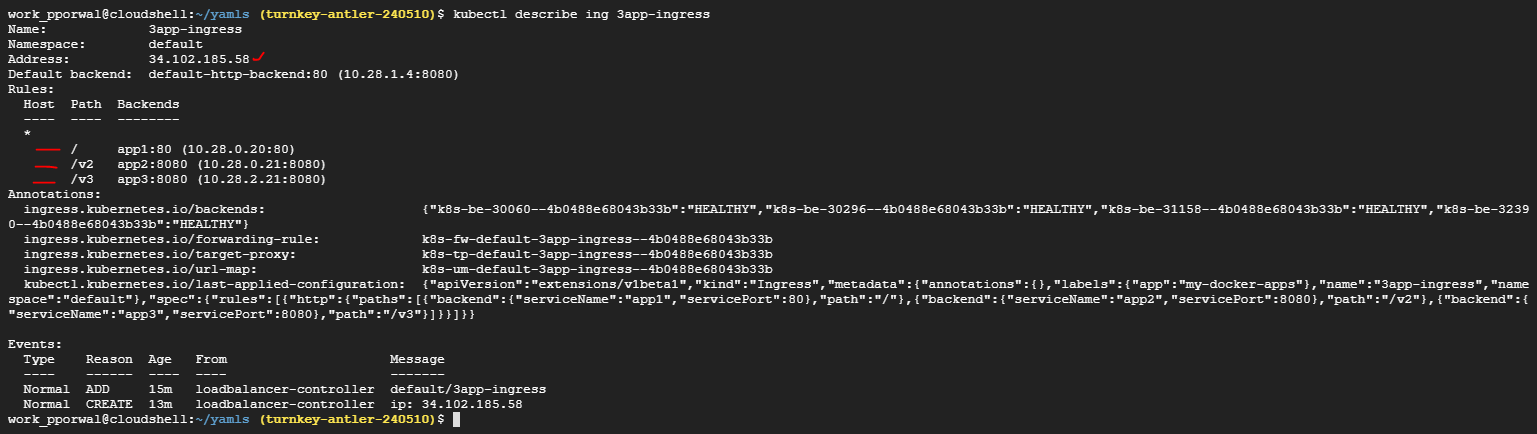

Step5: Serving multiple applications on a Load Balancer.

The following command shows the internals of the created Kubernetes Ingress:

$ kubectl describe ing 3app-ingress

You will see the description of the Ingress definition, including its rules and backends.

Crafted By

Punit
