In this article, we will look at an example of how to use ebs_block_device mappings with an AWS EC2 instance in Terraform.

It builds on the template from our earlier article on creating multiple EC2 instances using for_each and count together.

We highly recommend reading that earlier article on how to create multiple EC2 instances with different configurations before following this one.

The manifest here can be considered an upgraded version of the Terraform manifest discussed there.

This article specifically covers the Terraform ebs_block_device mapping and the Terraform root_block_device mapping when creating multiple EC2 instances.

It also shows how to add multiple ebs_block_device mappings per EC2 instance in the same manifest.

Let's go ahead.

Creating the tfvars file with server configuration and EBS Volumes

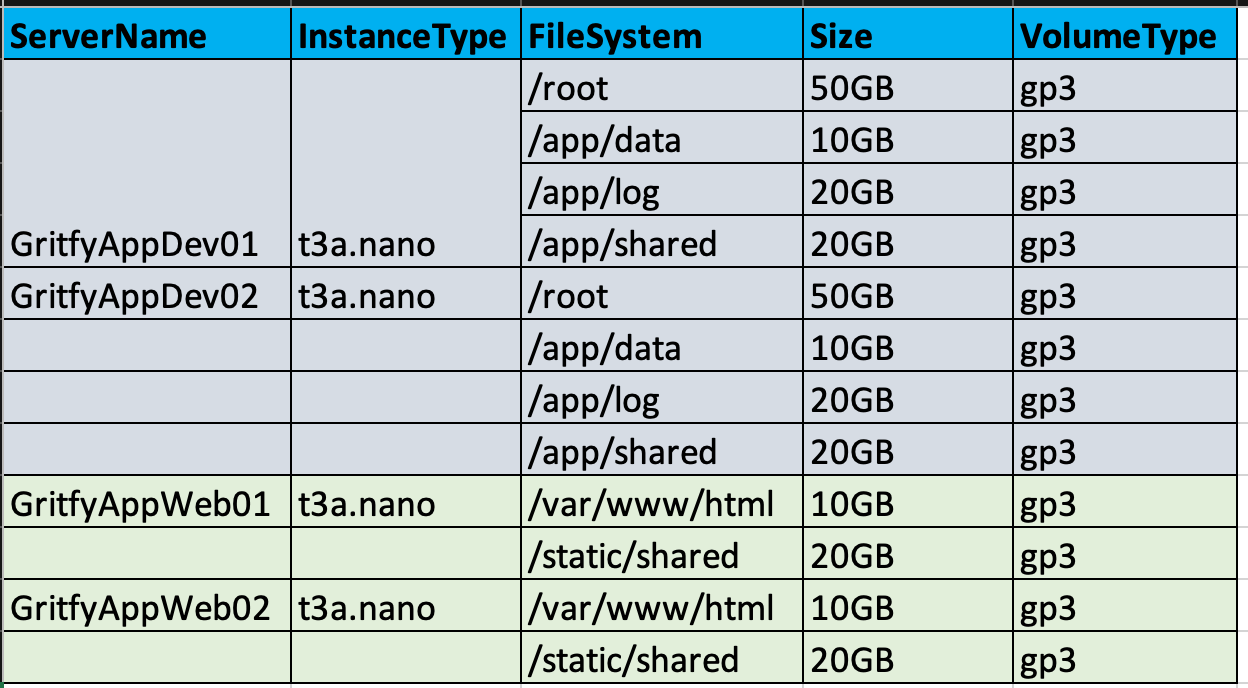

This is the configuration we are trying to achieve.

As you can see, we want to create multiple EC2 instances, each with its own set of EBS volumes of different sizes.

The same configuration from the preceding table is expressed below in the form of a tfvars file.

    configuration = [
      {
        "application_name" : "GritfyAppDev",
        "ami" : "ami-0c02fb55956c7d316",
        "no_of_instances" : "2",
        "instance_type" : "t3a.nano",
        "subnet_id" : "subnet-0f4f294d8404946eb",
        "vpc_security_group_ids" : ["sg-0d15a4cac0567478c", "sg-0d8749c35f7439f3e"],
        "root_block_device" : {
          "volume_size" : "30",
          "volume_type" : "gp3",
          "tags" : {
            "FileSystem" : "/root"
          }
        },
        "ebs_block_devices" : [
          {
            "device_name" : "/dev/xvdba",
            "volume_size" : "10",
            "volume_type" : "gp3",
            "tags" : {
              "FileSystem" : "/hana/data"
            }
          },
          {
            "device_name" : "/dev/xvdbb",
            "volume_size" : "20",
            "volume_type" : "gp3",
            "tags" : {
              "FileSystem" : "/hana/log"
            }
          },
          {
            "device_name" : "/dev/xvdbc",
            "volume_size" : "20",
            "volume_type" : "gp3",
            "tags" : {
              "FileSystem" : "/hana/shared"
            }
          },
        ]
      },
      {
        "application_name" : "GritfyWebDev",
        "ami" : "ami-0c02fb55956c7d316",
        "no_of_instances" : "2",
        "instance_type" : "t3a.nano",
        "subnet_id" : "subnet-0f4f294d8404946eb",
        "vpc_security_group_ids" : ["sg-0d15a4cac0567478c", "sg-0d8749c35f7439f3e"],
        "root_block_device" : {
          "volume_size" : "30",
          "volume_type" : "gp3",
          "tags" : {
            "FileSystem" : "/root"
          }
        },
        "ebs_block_devices" : [
          {
            "device_name" : "/dev/xvdba",
            "volume_size" : "10",
            "volume_type" : "gp3",
            "tags" : {
              "FileSystem" : "/web/data"
            }
          },
          {
            "device_name" : "/dev/xvdbc",
            "volume_size" : "20",
            "volume_type" : "gp3",
            "tags" : {
              "FileSystem" : "/web/shared"
            }
          },
        ]
      }
    ]

This is the vars file we are going to use to create 4 machines with EBS volumes of different sizes.

Now let us take a look at the main Terraform manifest file that uses this vars file and creates the EC2 instances.

The Terraform main.tf file to create Multiple EC2 instances with EBS Volumes

Here is the main.tf file, which processes the tfvars file and creates multiple EC2 instances with multiple EBS volumes and different configurations.

    provider "aws" {
      region  = "us-east-1"
      profile = "personal"
    }

    locals {
      # Expand every entry of var.configuration into one object per instance
      serverconfig = [
        for srv in var.configuration : [
          for i in range(1, srv.no_of_instances + 1) : {
            instance_name    = "${srv.application_name}-${i}"
            instance_type    = srv.instance_type
            subnet_id        = srv.subnet_id
            ami              = srv.ami
            rootdisk         = srv.root_block_device
            blockdisks       = srv.ebs_block_devices
            securitygroupids = srv.vpc_security_group_ids
          }
        ]
      ]
    }

    // We need to Flatten it before using it
    locals {
      instances = flatten(local.serverconfig)
    }

    resource "aws_instance" "web" {
      # One instance per element of local.instances, keyed by instance name
      for_each = { for server in local.instances : server.instance_name => server }

      ami                         = each.value.ami
      instance_type               = each.value.instance_type
      vpc_security_group_ids      = each.value.securitygroupids
      key_name                    = "Sarav-Easy"
      associate_public_ip_address = true
      user_data                   = file("init.sh")
      subnet_id                   = each.value.subnet_id

      tags = {
        Name = each.value.instance_name
      }

      root_block_device {
        volume_type = each.value.rootdisk.volume_type
        volume_size = each.value.rootdisk.volume_size
        tags        = each.value.rootdisk.tags
      }

      # One ebs_block_device block per element of blockdisks
      dynamic "ebs_block_device" {
        for_each = each.value.blockdisks
        content {
          volume_type = ebs_block_device.value.volume_type
          volume_size = ebs_block_device.value.volume_size
          tags        = ebs_block_device.value.tags
          device_name = ebs_block_device.value.device_name
        }
      }
    }

    output "instances" {
      value       = aws_instance.web
      description = "All Machine details"
    }
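The manifest reads var.configuration, so that variable has to be declared somewhere in the module; the declaration itself is not shown in this article. A minimal sketch of such a declaration, placed for example in a variables.tf file, could look like the following. The type constraint is an assumption derived from the tfvars file above, not the original code, and a plain `type = any` would also work.

```hcl
# Sketch only: the type constraint below is inferred from the tfvars file
variable "configuration" {
  description = "Per-application server settings, including root and additional EBS volumes"

  type = list(object({
    application_name       = string
    ami                    = string
    no_of_instances        = number
    instance_type          = string
    subnet_id              = string
    vpc_security_group_ids = list(string)

    root_block_device = object({
      volume_size = number
      volume_type = string
      tags        = map(string)
    })

    ebs_block_devices = list(object({
      device_name = string
      volume_size = number
      volume_type = string
      tags        = map(string)
    }))
  }))
}
```

With the number type, Terraform converts quoted values such as "2" and "30" from the tfvars file into numbers automatically, so the tfvars file does not need to change.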

At first it may be a little confusing what exactly this Terraform manifest is doing.

To understand it better, I strongly recommend reading the other article mentioned at the beginning of this one.

Inspecting the variables using terraform console

I hope you have read that article and understood what the local.serverconfig variable does and why we read the configuration from the tfvars file using for_each and for together.

To understand what these variables hold at runtime, I am going to use terraform console to inspect them before applying the changes.

Here is what local.serverconfig looks like:

    > local.serverconfig
    [
      [
        {
          "ami" = "ami-0c02fb55956c7d316"
          "blockdisks" = [
            {
              "device_name" = "/dev/xvdba"
              "tags" = {
                "FileSystem" = "/hana/data"
              }
              "volume_size" = "10"
              "volume_type" = "gp3"
            },
            {
              "device_name" = "/dev/xvdbb"
              "tags" = {
                "FileSystem" = "/hana/log"
              }
              "volume_size" = "20"
              "volume_type" = "gp3"
            },
            {
              "device_name" = "/dev/xvdbc"
              "tags" = {
                "FileSystem" = "/hana/shared"
              }
              "volume_size" = "20"
              "volume_type" = "gp3"
            },
          ]
          "instance_name" = "GritfyAppDev-1"
          "instance_type" = "t3a.nano"
          "rootdisk" = {
            "tags" = {
              "FileSystem" = "/root"
            }
            "volume_size" = "30"
            "volume_type" = "gp3"
          }
          "securitygroupids" = [
            "sg-0d15a4cac0567478c",
            "sg-0d8749c35f7439f3e",
          ]
          "subnet_id" = "subnet-0f4f294d8404946eb"
        },
        {
          "ami" = "ami-0c02fb55956c7d316"
          "blockdisks" = [
            {
              "device_name" = "/dev/xvdba"
              "tags" = {
                "FileSystem" = "/hana/data"
              }
              "volume_size" = "10"
              "volume_type" = "gp3"
            },
            {
              "device_name" = "/dev/xvdbb"
              "tags" = {
                "FileSystem" = "/hana/log"
              }
              "volume_size" = "20"
              "volume_type" = "gp3"
            },
            {
              "device_name" = "/dev/xvdbc"
              "tags" = {
                "FileSystem" = "/hana/shared"
              }
              "volume_size" = "20"
              "volume_type" = "gp3"
            },
          ]
          "instance_name" = "GritfyAppDev-2"
          "instance_type" = "t3a.nano"
          "rootdisk" = {
            "tags" = {
              "FileSystem" = "/root"
            }
            "volume_size" = "30"
            "volume_type" = "gp3"
          }
          "securitygroupids" = [
            "sg-0d15a4cac0567478c",
            "sg-0d8749c35f7439f3e",
          ]
          "subnet_id" = "subnet-0f4f294d8404946eb"
        },
      ],
      [
        {
          "ami" = "ami-0c02fb55956c7d316"
          "blockdisks" = [
            {
              "device_name" = "/dev/xvdba"
              "tags" = {
                "FileSystem" = "/web/data"
              }
              "volume_size" = "10"
              "volume_type" = "gp3"
            },
            {
              "device_name" = "/dev/xvdbc"
              "tags" = {
                "FileSystem" = "/web/shared"
              }
              "volume_size" = "20"
              "volume_type" = "gp3"
            },
          ]
          "instance_name" = "GritfyWebDev-1"
          "instance_type" = "t3a.nano"
          "rootdisk" = {
            "tags" = {
              "FileSystem" = "/root"
            }
            "volume_size" = "30"
            "volume_type" = "gp3"
          }
          "securitygroupids" = [
            "sg-0d15a4cac0567478c",
            "sg-0d8749c35f7439f3e",
          ]
          "subnet_id" = "subnet-0f4f294d8404946eb"
        },
        {
          "ami" = "ami-0c02fb55956c7d316"
          "blockdisks" = [
            {
              "device_name" = "/dev/xvdba"
              "tags" = {
                "FileSystem" = "/web/data"
              }
              "volume_size" = "10"
              "volume_type" = "gp3"
            },
            {
              "device_name" = "/dev/xvdbc"
              "tags" = {
                "FileSystem" = "/web/shared"
              }
              "volume_size" = "20"
              "volume_type" = "gp3"
            },
          ]
          "instance_name" = "GritfyWebDev-2"
          "instance_type" = "t3a.nano"
          "rootdisk" = {
            "tags" = {
              "FileSystem" = "/root"
            }
            "volume_size" = "30"
            "volume_type" = "gp3"
          }
          "securitygroupids" = [
            "sg-0d15a4cac0567478c",
            "sg-0d8749c35f7439f3e",
          ]
          "subnet_id" = "subnet-0f4f294d8404946eb"
        },
      ],
    ]

As you can see, the result is a list of nested lists, [ [...], [...] ], with one inner list per application.

So we flatten it and assign the result to local.instances.

Let us inspect the value of local.instances using terraform console too.

    > local.instances
    [
      {
        "ami" = "ami-0c02fb55956c7d316"
        "blockdisks" = [
          {
            "device_name" = "/dev/xvdba"
            "tags" = {
              "FileSystem" = "/hana/data"
            }
            "volume_size" = "10"
            "volume_type" = "gp3"
          },
          {
            "device_name" = "/dev/xvdbb"
            "tags" = {
              "FileSystem" = "/hana/log"
            }
            "volume_size" = "20"
            "volume_type" = "gp3"
          },
          {
            "device_name" = "/dev/xvdbc"
            "tags" = {
              "FileSystem" = "/hana/shared"
            }
            "volume_size" = "20"
            "volume_type" = "gp3"
          },
        ]
        "instance_name" = "GritfyAppDev-1"
        "instance_type" = "t3a.nano"
        "rootdisk" = {
          "tags" = {
            "FileSystem" = "/root"
          }
          "volume_size" = "30"
          "volume_type" = "gp3"
        }
        "securitygroupids" = [
          "sg-0d15a4cac0567478c",
          "sg-0d8749c35f7439f3e",
        ]
        "subnet_id" = "subnet-0f4f294d8404946eb"
      },
      {
        "ami" = "ami-0c02fb55956c7d316"
        "blockdisks" = [
          {
            "device_name" = "/dev/xvdba"
            "tags" = {
              "FileSystem" = "/hana/data"
            }
            "volume_size" = "10"
            "volume_type" = "gp3"
          },
          {
            "device_name" = "/dev/xvdbb"
            "tags" = {
              "FileSystem" = "/hana/log"
            }
            "volume_size" = "20"
            "volume_type" = "gp3"
          },
          {
            "device_name" = "/dev/xvdbc"
            "tags" = {
              "FileSystem" = "/hana/shared"
            }
            "volume_size" = "20"
            "volume_type" = "gp3"
          },
        ]
        "instance_name" = "GritfyAppDev-2"
        "instance_type" = "t3a.nano"
        "rootdisk" = {
          "tags" = {
            "FileSystem" = "/root"
          }
          "volume_size" = "30"
          "volume_type" = "gp3"
        }
        "securitygroupids" = [
          "sg-0d15a4cac0567478c",
          "sg-0d8749c35f7439f3e",
        ]
        "subnet_id" = "subnet-0f4f294d8404946eb"
      },
      {
        "ami" = "ami-0c02fb55956c7d316"
        "blockdisks" = [
          {
            "device_name" = "/dev/xvdba"
            "tags" = {
              "FileSystem" = "/web/data"
            }
            "volume_size" = "10"
            "volume_type" = "gp3"
          },
          {
            "device_name" = "/dev/xvdbc"
            "tags" = {
              "FileSystem" = "/web/shared"
            }
            "volume_size" = "20"
            "volume_type" = "gp3"
          },
        ]
        "instance_name" = "GritfyWebDev-1"
        "instance_type" = "t3a.nano"
        "rootdisk" = {
          "tags" = {
            "FileSystem" = "/root"
          }
          "volume_size" = "30"
          "volume_type" = "gp3"
        }
        "securitygroupids" = [
          "sg-0d15a4cac0567478c",
          "sg-0d8749c35f7439f3e",
        ]
        "subnet_id" = "subnet-0f4f294d8404946eb"
      },
      {
        "ami" = "ami-0c02fb55956c7d316"
        "blockdisks" = [
          {
            "device_name" = "/dev/xvdba"
            "tags" = {
              "FileSystem" = "/web/data"
            }
            "volume_size" = "10"
            "volume_type" = "gp3"
          },
          {
            "device_name" = "/dev/xvdbc"
            "tags" = {
              "FileSystem" = "/web/shared"
            }
            "volume_size" = "20"
            "volume_type" = "gp3"
          },
        ]
        "instance_name" = "GritfyWebDev-2"
        "instance_type" = "t3a.nano"
        "rootdisk" = {
          "tags" = {
            "FileSystem" = "/root"
          }
          "volume_size" = "30"
          "volume_type" = "gp3"
        }
        "securitygroupids" = [
          "sg-0d15a4cac0567478c",
          "sg-0d8749c35f7439f3e",
        ]
        "subnet_id" = "subnet-0f4f294d8404946eb"
      },
    ]

As you can see, the list is now flattened: the outer nesting is gone, while the blockdisks and securitygroupids lists inside each server are preserved.

This is the final value that goes to aws_instance, where it is iterated with for_each.
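As a minimal sketch of these two steps in isolation, the snippet below flattens a small nested list and then keys it by name, the same way the for_each expression in aws_instance does. The names nested, flat and by_name and the app-1/web-1 values are made up for illustration and are not part of the manifest in this article.

```hcl
locals {
  # Hypothetical nested list: one inner list per application
  nested = [
    [{ name = "app-1" }, { name = "app-2" }],
    [{ name = "web-1" }],
  ]

  # flatten() removes the inner lists and leaves a single list of objects
  flat = flatten(local.nested)

  # for_each needs a map (or a set of strings), so key every object by its name
  by_name = { for item in local.flat : item.name => item }
}

output "instance_keys" {
  # Lists the keys app-1, app-2 and web-1
  value = keys(local.by_name)
}
```

Because every key in the map must be unique, the instance names generated from application_name and the index must not collide across applications.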

If you take a single element from the local.instances list, it looks like this:

    {
      "ami" = "ami-0c02fb55956c7d316"
      "blockdisks" = [
        {
          "device_name" = "/dev/xvdba"
          "tags" = {
            "FileSystem" = "/web/data"
          }
          "volume_size" = "10"
          "volume_type" = "gp3"
        },
        {
          "device_name" = "/dev/xvdbc"
          "tags" = {
            "FileSystem" = "/web/shared"
          }
          "volume_size" = "20"
          "volume_type" = "gp3"
        },
      ]
      "instance_name" = "GritfyWebDev-2"
      "instance_type" = "t3a.nano"
      "rootdisk" = {
        "tags" = {
          "FileSystem" = "/root"
        }
        "volume_size" = "30"
        "volume_type" = "gp3"
      }
      "securitygroupids" = [
        "sg-0d15a4cac0567478c",
        "sg-0d8749c35f7439f3e",
      ]
      "subnet_id" = "subnet-0f4f294d8404946eb"
    }

Everything in this object can be passed to aws_instance as it is, except the blockdisks part.

Unlike vpc_security_group_ids, which accepts a list (our securitygroupids), ebs_block_device is a nested block and does not accept a list of volumes.

We need to split the blockdisks list into multiple ebs_block_device blocks.

The for_each on the aws_instance resource is already used to create one instance per server, and it cannot be used again to repeat a nested block inside the resource.

So we need to dynamically convert the blockdisks list into individual blocks like this:

    ebs_block_device {
      device_name = "/dev/xvdba"
      tags = {
        FileSystem = "/web/data"
      }
      volume_size = "10"
      volume_type = "gp3"
    }

    ebs_block_device {
      device_name = "/dev/xvdbc"
      tags = {
        FileSystem = "/web/shared"
      }
      volume_size = "20"
      volume_type = "gp3"
    }

To do this, we use a dynamic block.

A dynamic block generates nested blocks of the type named in its label (here ebs_block_device), with the body defined in content.

Inside dynamic, we can use for_each to iterate over the blockdisks list and create one ebs_block_device block per element.

    dynamic "ebs_block_device" {
      for_each = each.value.blockdisks
      content {
        volume_type = ebs_block_device.value.volume_type
        volume_size = ebs_block_device.value.volume_size
        tags        = ebs_block_device.value.tags
        device_name = ebs_block_device.value.device_name
      }
    }
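By default, the temporary variable inside content takes the name of the dynamic block's label, which is why the attributes are read as ebs_block_device.value. As a sketch, assuming the same local.instances as in the main.tf above, the optional iterator argument can give that variable a shorter name. The name disk is picked only for this illustration, and the resource is trimmed to the arguments needed to show the idea.

```hcl
# Trimmed variant of the aws_instance.web resource above, not an additional resource
resource "aws_instance" "web" {
  for_each = { for server in local.instances : server.instance_name => server }

  ami           = each.value.ami
  instance_type = each.value.instance_type
  subnet_id     = each.value.subnet_id

  dynamic "ebs_block_device" {
    for_each = each.value.blockdisks
    iterator = disk # hypothetical name; replaces ebs_block_device.value below

    content {
      device_name = disk.value.device_name
      volume_type = disk.value.volume_type
      volume_size = disk.value.volume_size
      tags        = disk.value.tags
    }
  }
}
```

Here each.value still refers to the current server from the resource-level for_each, while disk.value refers to the current element of that server's blockdisks list.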

If this is hard to follow at first, reading it once or twice and trying it out in practice should help.

If you still have specific questions, please reach out to me in the comments section. I will try to help.

The Final Validation - Terraform plan

To see how our tfvars and our main.tf, with for_each and the dynamic block, are expanded before anything is applied on AWS, terraform plan is the best option.

Let us run terraform plan and see what the output looks like.

To keep this article short, I have put the complete output of my terraform plan in a Gist, which you can refer to here:

https://gist.github.com/AKSarav/ddd73d30de8a09f4aa7082e1bbad4aa5

Applying the Changes and Validating

Now it is time to apply our changes using Terraform.

As a best practice, please make sure you are using the right AWS provider configuration (profile) and the right region before entering yes at the terraform apply prompt.

Here is the execution result as a quick video clip. You can pause it to see what my plan and the output looked like.

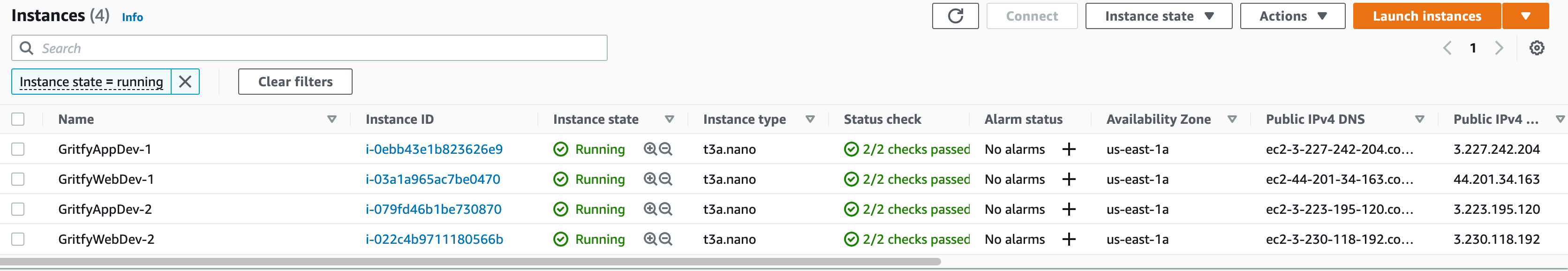

Once Terraform completes the job, you can head directly to the AWS console to validate that the EBS volumes are created and mapped correctly.

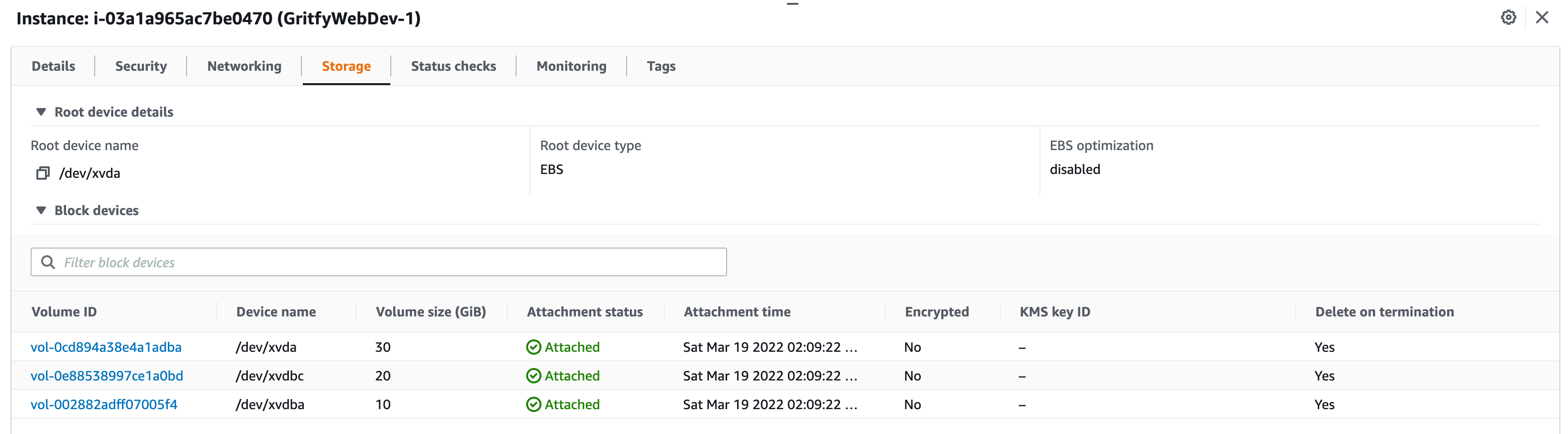

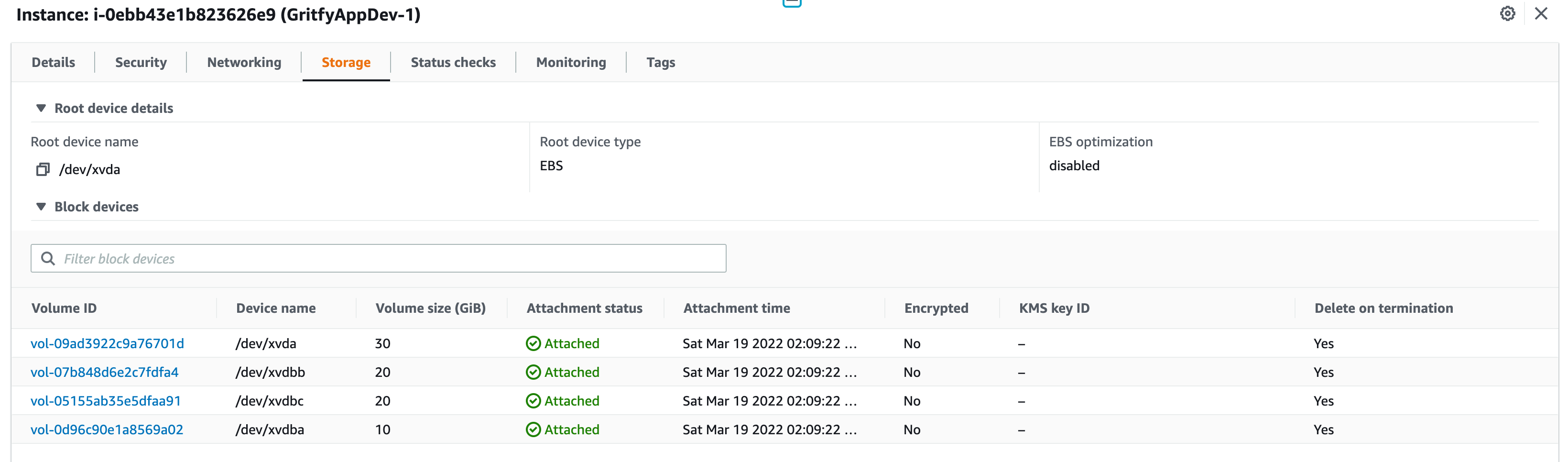

Here are the screenshots I took from my AWS console upon completion.

All four instances are created in the right subnet and follow the naming convention.

Here is the GritfyWebDev server with 1 root volume and 2 EBS volumes.

Here is the GritfyAppDev server with 1 root volume and 3 EBS volumes.

All of them are created just the way we wanted.
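If you prefer to check the mapping from the command line as well, an extra output along the following lines could list the attached device names per instance. This is only a sketch: the output name ebs_devices_by_instance is made up, and it assumes the aws_instance.web resource from the main.tf above.

```hcl
# Hypothetical extra output; relies on aws_instance.web defined in main.tf
output "ebs_devices_by_instance" {
  description = "Device names of the additional EBS volumes, keyed by instance name"

  value = {
    for name, inst in aws_instance.web :
    name => sort([for d in inst.ebs_block_device : d.device_name])
  }
}
```

After terraform apply, running terraform output ebs_devices_by_instance prints the map, which can be compared with the tfvars file.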

Some Caveats and prerequisites

There are a few points to remember while using ebs_block_device with Terraform. Most of them are also prerequisites for the successful execution of your Terraform manifest.

Choosing the right device_name for ebs_block_device

For ebs_block_device, device_name must be specified, and you cannot choose it arbitrarily. There is a naming standard, and it varies based on the AMI you choose.

Refer to this article to know more about the naming standards of EBS volumes.

The EBS block name within the Linux machine

Even if you give the device_name correctly, the disk may not appear under that name inside your Linux machine once it is created.

Again, depending on the AMI you are using, the name changes internally.

Read more about the device name limitations.

EBS volumes are only attached not mounted

This Terraform manifest and ebs_block_device stop at creating the EBS volumes and attaching them to your EC2 instance.

Except for the root volume, none of the volumes defined under ebs_block_device are mounted automatically. You need to log in to the machine and mount them to the right filesystem.

You can use the following commands to list the disks after you log in to the EC2 instance, and then mount them manually:

lsblk or fdisk -l

The mount command might vary based on the OS you are using.

All of this code is available on GitHub

If you face any issues copying the source code from here, you can clone the GitHub repo directly or copy the code from there.

Conclusion

In this article, we have seen a Terraform ebs_block_device example and how to add storage volumes to an aws_instance.

We have also learnt how to use Terraform for_each, for expressions and dynamic blocks.

If you have any questions or need further help, please let us know in the comments.
